8 bits for a Byte: Get ready for the ultimate AI deep dive! We’re exploring Uber’s Prompt Engineering Toolkit, celebrating ChatGPT’s evolution into an industry juggernaut, and sharing Gartner’s insights on how CIOs can harness AI’s power. Whether you’re scaling enterprise solutions or sparking creative innovation, this week’s highlights are packed with tools, tips, and inspiration to drive success.

Join us for Women in AI: an unforgettable night at the heart of Silicon Valley's AI revolution! Free admission, food and refreshments! (Women and men are invited!)

If you're frustrated by one-sided reporting, our 5-minute newsletter is the missing piece. We sift through 100+ sources to bring you comprehensive, unbiased news—free from political agendas. Stay informed with factual coverage on the topics that matter.

Let’s Get To It!

Welcome to 8 bits for a Byte!

Here's what caught my eye in the world of AI this week:

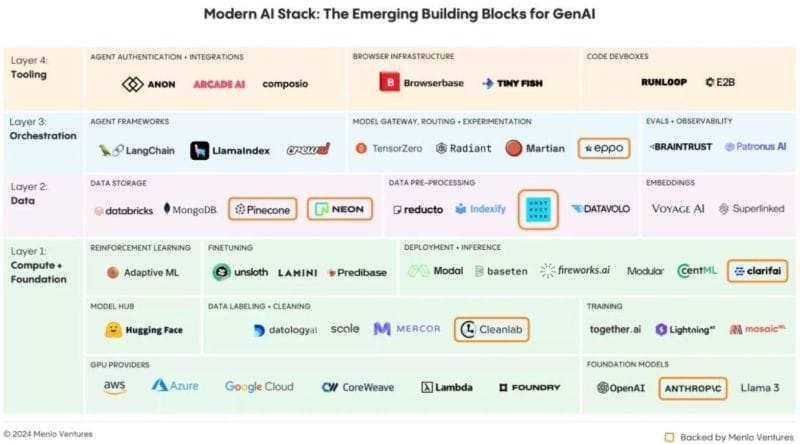

This clear, high-impact overview of the modern AI stack offers a 10,000-foot perspective on how enterprise architecture is evolving to harness the full potential of AI. Discover the key layers, emerging trends, and game-changing opportunities shaping the future of technology today.

Executive Summary

The modern AI stack is undergoing a transformation, shifting from early experimentation to a structured framework that drives enterprise innovation. The new stack is built on four key layers—Compute & Foundation Models, Data, Deployment, and Observability—each enabling enterprises to harness AI's potential efficiently and securely. This evolution has redefined AI development, moving from labor-intensive ML pipelines to product-first approaches powered by advanced large language models (LLMs). As enterprises embrace this new paradigm, they unlock opportunities to innovate faster, reduce costs, and democratize AI capabilities across teams.

Key Takeaways

1. AI Maturity Redefined: From Model-First to Product-First

Traditional AI development required extensive expertise and resources to build custom models. Today, pre-trained LLMs enable teams to start with product-focused innovation, leveraging tools like OpenAI APIs to build applications rapidly. This shift democratizes AI, empowering mainstream developers to drive impactful solutions.

2. Core Infrastructure Layers Powering Innovation

The modern AI stack comprises four essential layers:

Compute & Foundation Models: Training, fine-tuning, and deploying powerful models.

Data: Tools like vector databases and ETL pipelines provide enterprise-specific context.

Deployment: Orchestrating AI applications with agent frameworks and prompt management.

Observability: Monitoring runtime behavior to ensure reliability and security.

These layers collectively form the backbone of enterprise AI systems, ensuring scalability and performance.

3. Key Trends Shaping the Future of AI

RAG Dominance: Retrieval-augmented generation leads in customizing LLMs with enterprise-specific knowledge, ensuring relevance and accuracy.

Proliferation of Small, Task-Specific Models: As enterprises seek cost-effective, domain-specific solutions, fine-tuned models are becoming pivotal.

Serverless Architectures: The shift to serverless computing optimizes costs and simplifies operations, paving the way for seamless scaling and innovation.

This dynamic ecosystem not only accelerates AI adoption but also redefines how enterprises innovate, ensuring AI-driven solutions remain accessible, efficient, and impactful.
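To make the RAG trend concrete, here is a minimal Python sketch. It assumes a tiny in-memory list of documents and uses a bag-of-words cosine similarity as a stand-in for the embedding model and vector database a real stack would use. The names `DOCS`, `retrieve`, and `build_prompt` are hypothetical and not taken from any specific product. The point is only the shape of the pattern: retrieve the most relevant enterprise text first, then paste it into the prompt as context for the LLM.

```python
import math
import re
from collections import Counter

# Hypothetical in-memory "knowledge base" standing in for enterprise documents.
DOCS = [
    "Expense reports must be submitted within 30 days of purchase.",
    "VPN access requires multi-factor authentication for all employees.",
    "The cafeteria is open from 8am to 3pm on weekdays.",
]

def tokenize(text: str) -> Counter:
    """Lowercase bag-of-words; a stand-in (assumption) for a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query: str, docs: list[str], k: int = 1) -> list[str]:
    """Return the k documents most similar to the query (the 'retrieval' step)."""
    q = tokenize(query)
    ranked = sorted(docs, key=lambda d: cosine(q, tokenize(d)), reverse=True)
    return ranked[:k]

def build_prompt(query: str, docs: list[str], k: int = 1) -> str:
    """Augment the user question with retrieved context before calling an LLM."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )

print(build_prompt("How many days do I have to submit expense reports?", DOCS))
```

Swapping `tokenize` and `cosine` for a real embedding model and vector index would change the quality of retrieval, not the overall flow.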

Quote of the week

Success demands unwavering self-belief. At the start, it's just you and your idea—a vision you hold onto fiercely. But the second toughest challenge? Letting go of that initial idea to pivot, adapt, and ultimately find product-market fit. That’s where true growth begins.

Last week, I gave you a glimpse of this impactful event, and now I'm excited to share a deeper dive by host Jeremiah Owyang, a thought leader I've followed since the rise of social media. Back in 2018, our Silicon Valley AI group organized a meetup at this very venue, and ServiceRocket's space, with its majestic dome, once again set the stage for innovation and collaboration.

Collaboration in Focus at the Inaugural AI Agent Congress

The Inaugural AI Agent Congress was a significant step toward fostering collaboration in the rapidly evolving AI agent space. Bringing together 31 AI agent founders, investors, and industry leaders, the event provided a platform to discuss key industry challenges and opportunities. With a mix of structured presentations, facilitated group discussions, and an unconference format, attendees explored topics like interoperability, agent standards, and the relationship between startups and tech giants. The venue in Palo Alto provided a welcoming atmosphere for meaningful dialogue, and the diversity of perspectives enriched the discussions.

This event was more than just a gathering—it marked the beginning of an industry-wide conversation. From practical strategies for agent development to deeper explorations of AI’s role in enterprise solutions, the Congress created a foundation for ongoing collaboration. The shared insights and connections made during the day underscore the importance of building a community to shape the future of AI agents thoughtfully and effectively.

Author - Jeremiah Owyang

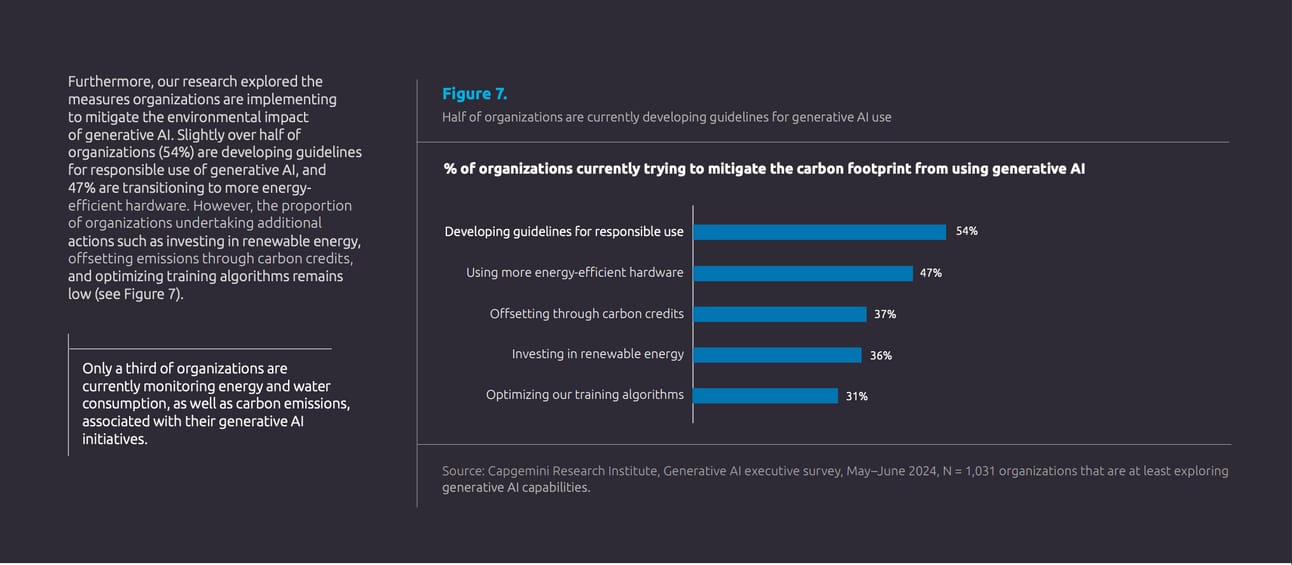

Reducing carbon emissions isn’t just environmentally responsible—it’s smart business. As AI's energy demands grow, optimizing CPU usage and designing efficient architectures are key to maximizing ROI while minimizing environmental impact. It’s a win-win for your bottom line and the planet.

Executive Summary

The Capgemini Research Institute's latest report reveals how generative AI is transforming industries and business functions, with organizations accelerating adoption and investment. Generative AI has evolved from exploratory pilots to tangible applications, delivering measurable productivity gains and reshaping business strategies. With sectors like retail, pharma, and high-tech leading the charge, the future of AI is characterized by innovation, efficiency, and the rise of AI agents. However, organizations must address challenges in data governance, ethics, and cybersecurity to harness its full potential.

Key Takeaways

1. Generative AI Adoption is Accelerating

Investment in generative AI has surged, with 80% of organizations increasing their budgets. The adoption rate for enterprise functions, such as IT and marketing, has climbed significantly, with 24% of organizations now deploying generative AI across multiple areas—up from 6% in 2023.

2. AI Agents Signal a New Frontier

AI agents are emerging as autonomous tools capable of executing complex tasks, with 82% of organizations planning adoption within three years. These agents promise enhanced automation, productivity, and innovation across domains, from customer service to healthcare.

3. Challenges Demand Strategic Action

To scale generative AI successfully, organizations must establish robust data governance frameworks, address ethical concerns like bias, and mitigate cybersecurity risks. Investing in talent development and adopting platforms to manage AI use cases will be critical for long-term success.

This report positions generative AI as both a transformative force and a strategic imperative for forward-thinking enterprises.

Your daily AI dose

Mindstream is the HubSpot Media Network’s hottest new property. Stay on top of AI, learn how to apply it… and actually enjoy reading. Imagine that.

Our small team of actual humans spends their whole day creating a newsletter that’s loved by over 150,000 readers. Why not give us a try?

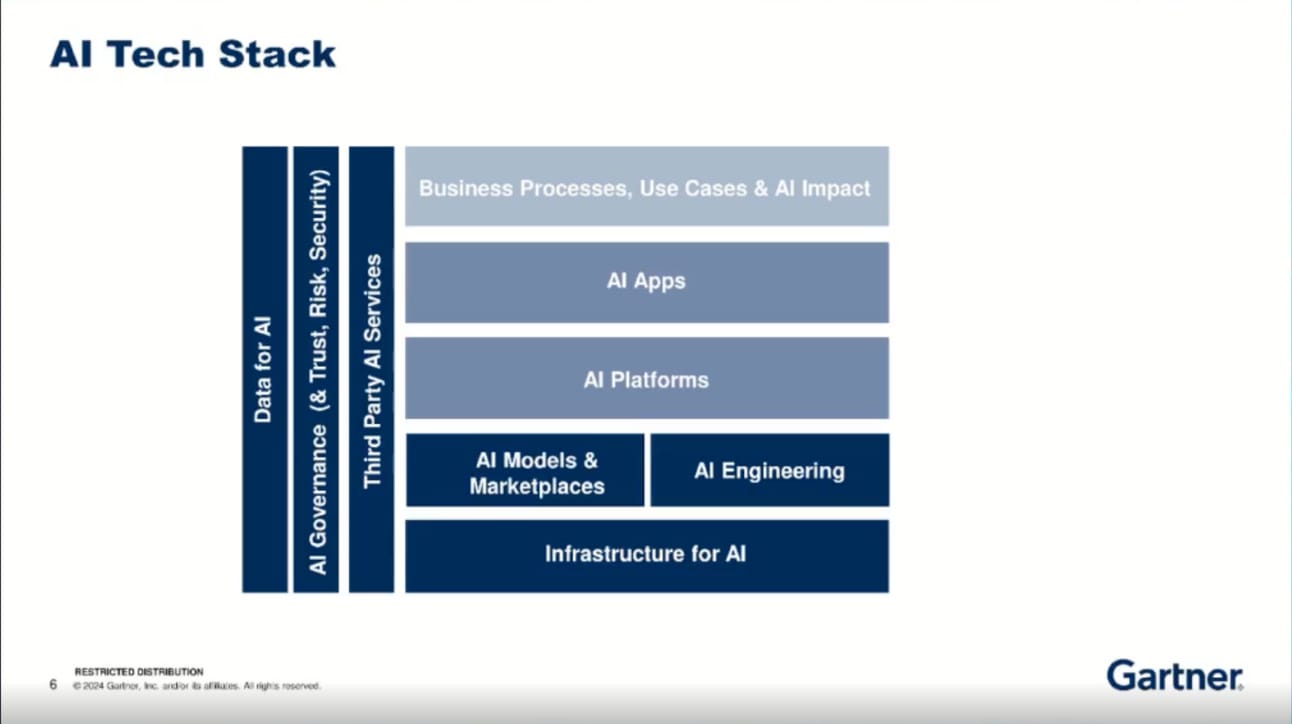

Bits: 🚀 75% of CIOs are ramping up their AI budgets for 2024! But here’s the million-dollar question: is your organization truly prepared to extract real value from AI and generative AI (GenAI)?

Bytes: It’s time for CIOs and IT leaders to step up as architects of AI success. This Gartner webinar is your cheat sheet to understanding how to lay a solid foundation for AI’s transformative potential. You’ll gain insights on:

Tech Readiness Check: Is your infrastructure ready to support AI-driven initiatives and ensure scalability?

Value Mapping with AI & GenAI: Learn how to identify and quantify the tangible benefits AI can bring to your organization.

CIOs as AI Visionaries: Discover the pivotal roles CIOs play in steering their enterprises toward AI-driven success.

🔥 Ready to lead your organization into the AI-driven future? Don’t miss this exclusive Gartner session!

Friday Funnies 🤣 Finish the year strong with a good year-end Arse kicking. Don’t know why, but saying “Arse” just makes me smile.

Uber has consistently been at the forefront of AI innovation. With its Michelangelo ML platform and focus on scalable frameworks, the company sets a strong example of how enterprises can harness AI effectively. While every organization will carve its unique path, those that look to trailblazers like Uber for inspiration and best practices are poised for success in building impactful AI systems.

Executive Summary

Uber's cutting-edge Prompt Engineering Toolkit streamlines the creation, iteration, and deployment of highly effective prompts for Large Language Models (LLMs). By centralizing tools and best practices, Uber enables teams to design precise prompts, integrate contextual data, and monitor performance seamlessly. With robust evaluation frameworks and safety measures, this toolkit empowers users to unlock the full potential of LLMs while ensuring responsible AI usage.

Key Takeaways

End-to-End Prompt Engineering Excellence

A structured lifecycle supports prompt design from ideation to production with iterative testing and evaluation.

Integration of advanced techniques like Chain of Thought (CoT) and Retrieval-Augmented Generation (RAG) enhances prompt accuracy and relevance.

Seamless User Experience

The GenAI Playground simplifies LLM exploration and prompt testing via an intuitive interface with real-time model experimentation.

Auto-prompting tools and reusable templates save time and enable even non-experts to craft effective prompts efficiently.

Robust Production Monitoring

Version control, tagging, and daily performance metrics (e.g., accuracy, latency) ensure high-quality deployments.

Batch and real-time use cases, such as rider name validation and support ticket summarization, demonstrate scalable impact across Uber’s operations.
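The summary above does not describe the toolkit's internals, so the following is only a hypothetical Python sketch of two of its ideas: reusable prompt templates with version control and tagging, and a daily accuracy/latency summary. `PromptRegistry`, `publish`, `tag`, `render`, and `daily_report` are illustrative names, not Uber APIs, and the "Think step by step" wording is just one common way to request Chain of Thought reasoning.

```python
from dataclasses import dataclass, field
from statistics import mean
from string import Template

@dataclass
class PromptVersion:
    version: int
    template: Template

@dataclass
class PromptRegistry:
    """Hypothetical prompt store: immutable numbered versions plus movable tags."""
    versions: dict[str, list[PromptVersion]] = field(default_factory=dict)
    tags: dict[tuple[str, str], int] = field(default_factory=dict)

    def publish(self, name: str, text: str) -> int:
        """Save a new version of a prompt template and return its number."""
        history = self.versions.setdefault(name, [])
        version = len(history) + 1
        history.append(PromptVersion(version, Template(text)))
        return version

    def tag(self, name: str, tag: str, version: int) -> None:
        """Point a tag (e.g. 'production') at an existing version."""
        if not 1 <= version <= len(self.versions.get(name, [])):
            raise ValueError(f"unknown version {version} for {name!r}")
        self.tags[(name, tag)] = version

    def render(self, name: str, tag: str, **values: str) -> str:
        """Fill the tagged template; raises KeyError if a variable is missing."""
        version = self.tags[(name, tag)]
        return self.versions[name][version - 1].template.substitute(**values)

def daily_report(results: list[tuple[bool, float]]) -> dict[str, float]:
    """Summarize (correct, latency_seconds) pairs into accuracy and mean latency."""
    return {
        "accuracy": sum(ok for ok, _ in results) / len(results),
        "mean_latency_s": mean(lat for _, lat in results),
    }

registry = PromptRegistry()
registry.publish("ticket_summary", "Summarize this support ticket:\n$ticket")
v2 = registry.publish(
    "ticket_summary",
    "Think step by step, then summarize this support ticket in two sentences:\n$ticket",
)
registry.tag("ticket_summary", "production", v2)
print(registry.render("ticket_summary", "production", ticket="App crashes on login."))
print(daily_report([(True, 0.8), (True, 1.2), (False, 1.0)]))
```

Keeping versions immutable and moving only the `production` tag means a rollback is simply re-tagging an earlier version.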

The Bottom Line

Uber's toolkit is a masterclass in prompt engineering, showcasing how to operationalize AI efficiently and responsibly. It’s a game-changer for organizations seeking to harness LLMs at scale with minimal overhead and maximum impact. 🚀

Authors - Manoj Sureddi, Hwamin Kim, Sishi Long

Here’s Why Over 4 Million Professionals Read Morning Brew

Business news explained in plain English

Straight facts, zero fluff, & plenty of puns

100% free

Executive Summary

Two years since its launch, OpenAI’s ChatGPT has become a defining force in AI, reshaping workflows and driving innovation across industries. With 92% of Fortune 500 companies adopting it, ChatGPT is both a productivity booster and a driver of wealth for major tech firms, adding $8 trillion in market value to the largest players. However, its journey is far from smooth, with rising competition, new scandals, and geopolitical challenges signaling an evolving and more complex AI landscape.

Key Takeaways

1. ChatGPT: Transformative and Evolving

From advanced voice interaction to vision-based capabilities, ChatGPT’s feature set has grown rapidly, complemented by dedicated enterprise solutions and constant LLM upgrades.

Over 200 million active users showcase its widespread adoption, yet rising competition from models like DeepSeek and Alibaba’s Marco-o1 highlights the fast-paced innovation in AI.

2. Competitive and Controversial Terrain

OpenAI faces increasing competition from global players and decentralized ecosystems, with challengers focusing on reasoning, multimodality, and seamless integrations.

Controversies like the Sora video model leak and Musk’s lawsuit alleging monopolistic practices raise questions about OpenAI’s transparency and market dominance.

3. The Future of AI is Unwritten

OpenAI’s upcoming o1 model launch may redefine its dominance, but the trend toward task-specific models opens doors for new players.

Ethical and geopolitical dynamics, including equitable collaboration and AI adoption by government agencies, will play a critical role in shaping the path forward.

The Bottom Line

ChatGPT’s two-year journey illustrates the double-edged sword of generative AI: groundbreaking innovation paired with intensifying challenges. As the AI race accelerates, the balance between growth, fairness, and accountability will define its long-term impact.

Author - VentureBeat

Until next time, take it one bit at a time!

Rob

Thank you for scrolling all the way to the end! As a bonus, check out Lewis Walker's LinkedIn post.

P.S.

Join thousands of satisfied readers and get our expertly curated selection of top newsletters delivered to you. Subscribe now for free and never miss out on the best content across the web!