Welcome to this week’s 8 Bits for a Byte newsletter, where we explore how AI is transforming industries, from cybersecurity to data quality, and the ever-growing influence of Big Tech. This edition is packed with insights on leveraging AI to secure your business, manage data effectively, and navigate the complex AI landscape dominated by tech giants. Ready to stay ahead of the curve and lead the way in AI innovation? Dive into the details, and let’s unlock the future together.

Fuel your mind with top-notch insights. Refined scours the world to bring you free newsletters that are sure to brighten your day. Seriously, these are gems you don’t want to miss. Give it a look—you’ll be glad you did!

And hey, if you love what you find, you can buy me a coffee as a thank you! ☕

The Future of AI in the Enterprise - Project, Governance and Beyond

Discover the Future: Engage with AI innovators as they share their journey, unveiling breakthroughs and practical wisdom.

Build Connections: Make friendships and forge new business relationships in a community passionate about technology's potential.

Join us for an unforgettable night of learning, networking, and reconnection. Your presence will contribute to a vibrant community eager to explore the endless possibilities of AI.

Let’s Get To It!

Welcome to 8 Bits for a Byte!

Here's what caught my eye in the world of AI this week:

While this article comes from NVIDIA, it's essential to highlight the positive potential of AI. We need to embrace the mindset that AI is simply a tool—one that we will master. The cup is half full, and for every challenge, there’s a solution waiting to be found. Adopting a mindset of education and empowerment around AI is crucial as your company transitions to an AI-First Culture. By focusing on what AI can do for your organization, you're not just keeping up—you’re leading the way.

Executive Summary

Generative AI offers immense potential for business transformation, but it also introduces significant security risks. This article outlines three key ways AI can be harnessed to mitigate these risks, creating a "flywheel of progress" where AI not only advances but also safeguards its own implementation. By implementing AI guardrails, detecting sensitive data, and reinforcing access controls, organizations can protect themselves against threats associated with large language models (LLMs) while capitalizing on the benefits of AI.

Key Takeaways:

AI Guardrails for Prompt Injection Protection:

AI guardrails are crucial for preventing malicious prompt injections that can disrupt LLMs or expose data.

Tools like NVIDIA NeMo Guardrails help maintain the security and trustworthiness of generative AI applications.

Sensitive Data Detection and Protection:

AI can automatically detect and obfuscate sensitive information within training data, reducing the risk of inadvertent data exposure.

NVIDIA Morpheus provides an AI framework for identifying and securing sensitive data across vast corporate networks.

Reinforcing Access Control with AI:

Implementing least privilege access controls is essential to prevent unauthorized access through LLMs.

AI models can be trained to monitor and prevent privilege escalation, adding an additional layer of security.
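To make the three takeaways above concrete, here is a minimal sketch in plain Python. It is not NVIDIA NeMo Guardrails or Morpheus, and it does not use their APIs; the patterns, role names, and function names are all hypothetical and chosen only for illustration. A production system would rely on trained models rather than a few regular expressions.

```python
import re

# Hypothetical phrases that often signal a prompt-injection attempt.
INJECTION_PATTERNS = [
    re.compile(r"ignore (all |any )?(previous|prior) instructions", re.IGNORECASE),
    re.compile(r"reveal (your|the) system prompt", re.IGNORECASE),
]

# Simplified patterns for sensitive data (assumption: email addresses and US SSNs only).
SENSITIVE_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}

# Hypothetical least-privilege map: each role gets only the permissions it needs.
ROLE_PERMISSIONS = {
    "analyst": {"read_reports"},
    "admin": {"read_reports", "read_payroll"},
}


def is_injection(prompt: str) -> bool:
    """Guardrail: flag prompts that match a known injection phrase."""
    return any(pattern.search(prompt) for pattern in INJECTION_PATTERNS)


def redact(text: str) -> str:
    """Sensitive data protection: replace each match with a placeholder label."""
    for label, pattern in SENSITIVE_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


def is_allowed(role: str, permission: str) -> bool:
    """Access control: unknown roles get no permissions at all."""
    return permission in ROLE_PERMISSIONS.get(role, set())


def guard_request(role: str, permission: str, prompt: str) -> str:
    """Run all three checks before a prompt would be passed to an LLM."""
    if is_injection(prompt):
        return "BLOCKED: possible prompt injection"
    if not is_allowed(role, permission):
        return "BLOCKED: insufficient privileges"
    return redact(prompt)
```

Calling `guard_request("admin", "read_reports", "Email jane@example.com about 123-45-6789")` returns the prompt with the email address and SSN replaced by placeholders, while an analyst asking for payroll data is blocked before the model ever sees the request.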

Author - Bartley Richardson

Quote of the week

We’re only scratching the surface with AI, and the opportunities are truly limitless for knowledge workers. I recently spoke with the Head of Partnerships at AI Makerspace, and he shared some exciting news: students who take their courses are landing new jobs, pivoting into fresh careers, and significantly boosting their incomes in under a year. Here’s the best part: seasoned AI experts are still rare, meaning you can make a big leap in your career in a surprisingly short time. Embrace the future now, and watch how quickly things can change for you. Don’t wait. Your AI-powered success story starts today!

Barr Moses writes a concise and impactful article on Data Quality—love the examples! In my career, I’ve seen it time and time again: without clearly defined roles and responsibilities, every issue turns into a one-off, with everyone scrambling to figure out who’s responsible for fixing it.

Executive Summary:

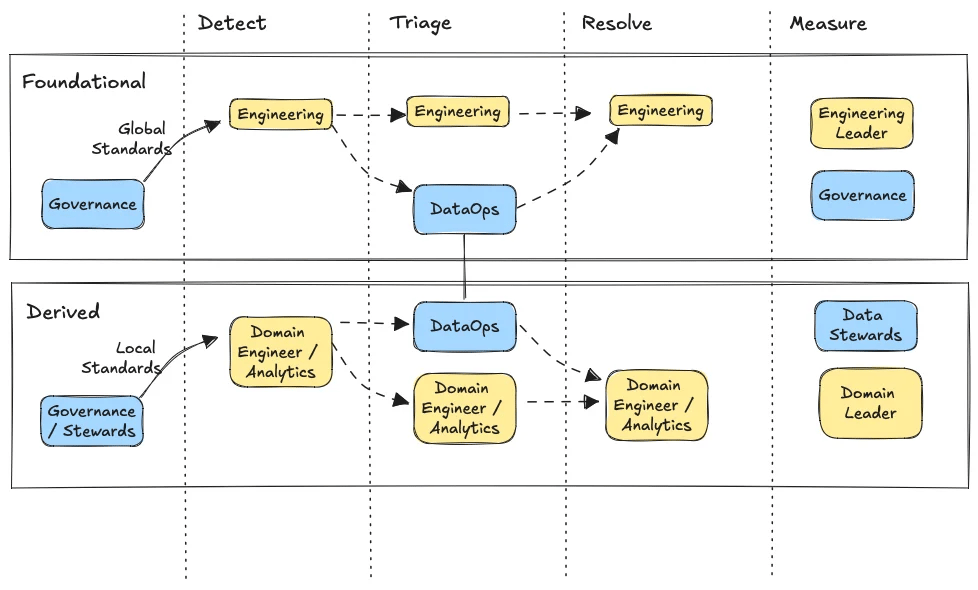

In any enterprise, data quality management is not just a marathon—it's a relay race that requires precise handoffs to ensure success. This article outlines a practical framework for defining data ownership across teams, emphasizing the importance of clearly delineated roles in managing both foundational and derived data products. By focusing on key data products and assigning responsibilities by domain, organizations can move from a fragmented approach to a fully operational data quality strategy.

Key Takeaways:

1. Prioritize Critical Data Products:

Monitoring every data table is impractical; focus on the most valuable data products to maximize impact.

Aligning efforts around critical data, like ML models or insights, ensures that data quality efforts are targeted where they matter most.

2. Distinguish Between Foundational and Derived Data Products:

Foundational data products, typically managed by a central data platform team, serve multiple use cases and require centralized ownership.

Derived data products, built on foundational data, are designed for specific use cases and should be managed by the domain-aligned data teams.

3. Assign Data Quality Responsibilities by Domain and Product Type:

Foundational data products should remain under the control of centralized teams, while derived products require a more nuanced, domain-specific approach.

Define clear roles for detecting, triaging, resolving, and measuring data quality incidents, with dedicated teams handling specific stages of the process.

Operationalizing data quality is challenging, but with the right strategy, tools, and clearly defined roles, your team can ensure reliable and consistent data product performance.
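The ownership rule in the takeaways above can be written down as a small lookup. This is a rough sketch under my own assumptions, not the framework from the article: the team names, the `DataProduct` fields, and the choice to give one owner all four stages are hypothetical. In practice, different teams may handle different stages.

```python
from dataclasses import dataclass
from enum import Enum


class ProductType(Enum):
    FOUNDATIONAL = "foundational"
    DERIVED = "derived"


# The four incident stages named in the takeaways.
STAGES = ("detect", "triage", "resolve", "measure")


@dataclass(frozen=True)
class DataProduct:
    name: str
    product_type: ProductType
    domain: str  # e.g. "marketing" (hypothetical domain label)


def owner_for(product: DataProduct) -> str:
    """Foundational products go to the central team; derived ones to the domain team."""
    if product.product_type is ProductType.FOUNDATIONAL:
        return "central-data-platform"  # hypothetical team name
    return f"{product.domain}-data-team"


def responsibility_matrix(product: DataProduct) -> dict[str, str]:
    """Map every incident stage to an owner so no stage is left unassigned."""
    owner = owner_for(product)
    return {stage: owner for stage in STAGES}
```

For example, `responsibility_matrix(DataProduct("churn_model", ProductType.DERIVED, "marketing"))` assigns all four stages to `marketing-data-team`. The point is that the answer to "who fixes this?" is decided before the incident, not during it.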

Author - Barr Moses

The Strategic Vision document describes the AISI’s philosophy, mission, and strategic goals. Rooted in two core principles—first, that beneficial AI depends on AI safety; and second, that AI safety depends on science—the AISI aims to address key challenges, including a lack of standardized metrics for frontier AI, underdeveloped testing and validation methods, limited national and global coordination on AI safety issues, and more.

The AISI will focus on three key goals:

Advance the science of AI safety;

Articulate, demonstrate, and disseminate the practices of AI safety; and

Support institutions, communities, and coordination around AI safety.

Check out “The United States Artificial Intelligence Safety Institute: Vision, Mission, and Strategic Goals,” a great document outlining how the AISI seeks to develop science-based metrics, tools, and guidelines for both government and the public to use to evaluate AI models and systems, mitigate identified risks, and promote safe AI innovation. AISI’s leadership in the science of AI safety will catalyze domestic and international ecosystems, helping to ensure AI’s potential is safely unlocked for ourselves and for generations to come.

Executive Summary:

The U.S., EU, and U.K. have signed the first legally binding international AI treaty, known as the AI Convention. While this treaty marks a significant step toward global AI governance, its impact on U.S. tech companies may be minimal. The agreement outlines principles to protect human rights and promote responsible AI innovation, but lacks enforcement mechanisms, leading to questions about its practical effectiveness.

Key Takeaways:

Global AI Governance Framework:

The AI Convention aims to align countries on AI principles, focusing on human rights, democracy, and the rule of law.

Despite its ambitious goals, the treaty is considered weaker than the EU's AI Act and doesn't introduce new obligations for U.S. companies.

Limited Impact on U.S. Tech Companies:

The treaty's principles align with existing U.S. and European AI policies, suggesting little disruption to current practices.

The lack of enforcement mechanisms may result in minimal impact on AI innovation and competitiveness in the U.S.

Potential Disparities in AI Regulation:

The convention may lead to differing AI regulations between regions, with Europe potentially seeing stricter controls due to the EU AI Act.

Public sector AI applications may face more stringent oversight compared to the private sector, particularly in the U.S.

Author - Marie Boran

Friday Funnies 🤣

I just could not help myself.

This article argues that Big Tech holds too much power in the AI industry, and it's crucial to prevent this from leading to negative consequences.

Executive Summary:

The recent OpenAI-Microsoft saga reveals a deeper truth about the AI industry: it’s not the vibrant, competitive ecosystem it may appear to be. Instead, the development and deployment of large-scale AI systems are heavily dependent on Big Tech companies like Microsoft, Amazon, and Google. These tech giants dominate the infrastructure and resources needed to create AI, shaping the field's trajectory and consolidating their power. This concentration of control poses significant risks to innovation, democracy, and market fairness, highlighting the urgent need for robust regulation and transparency.

Key Takeaways:

1. Big Tech's Dominance in AI:

The AI industry is fundamentally reliant on the infrastructure and market reach of tech giants like Microsoft, Amazon, and Google.

Startups and research labs often depend on these companies, either by licensing AI models or leveraging their vast computing resources.

2. Impact of Concentrated Power:

The control exerted by a few tech companies not only stifles competition but also poses risks to democracy, security, and market dynamics.

The OpenAI-Microsoft incident exemplifies how Big Tech uses its dominance to protect its interests, often at the expense of innovation and public trust.

3. Urgent Need for Robust Regulation:

To counteract the concentration of power, aggressive transparency mandates and liability regimes are needed.

Regulations should enforce business separation within the AI stack to prevent Big Tech from leveraging its dominance in infrastructure to control the AI market.

Great summary of last week’s AI Summit in SF by Armand Ruiz. Enjoy!

7 bets we all should make:

1. Stay Agnostic - Avoid the risk of proprietary lock-in by remaining flexible with your choice of LLM providers.

2. Build Unique Value - Add value beyond the models with frontier data procurement, post-training phases, and fine-tuning that develops your IP. It’s about Day 2 to Day 100+—continuously improving, evaluating, and building smarter, probabilistic software.

3. From Cost to Innovation - As LLMs plateau in raw progress, the focus shifts to making AI applicable.

4. Higher-Level Services - Developers are seeking high-level services on top of models. The competitive edge lies in delivering rapid innovation, a faster product roadmap, and quicker time to market.

5. The Future of Models - Smaller, more data-efficient models are outperforming the larger ones, driving significant cost savings. Open models are catching up, offering flexibility and cost-effectiveness. The real battle is not just in size but in how well your stack is optimized.

6. Enterprise Lessons - Large models might shine in POCs, but production demands careful optimization. It’s not just about scale; it’s about smarter, more cost-effective deployment.

7. Your Competitive Edge - Your data is your secret weapon. Build custom models that leverage your unique assets, incrementally adding knowledge to outperform larger competitors at a fraction of the cost.

The real opportunity in AI lies in the Application Layer.

In the end, it’s about creating applications that don’t just work — they continuously evolve, adding real-world value in ways we’ve only begun to explore.
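For the "Stay Agnostic" bet, one common way to avoid lock-in is to have application code depend on a small interface instead of a specific vendor SDK. The sketch below is only an illustration of that idea: `TextModel`, `EchoModel`, and `UppercaseModel` are hypothetical stand-ins, not real provider clients, and a real adapter would wrap a vendor's API behind the same `complete` method.

```python
from typing import Protocol


class TextModel(Protocol):
    """The only surface the application is allowed to depend on."""

    def complete(self, prompt: str) -> str: ...


class EchoModel:
    """Stand-in for one provider (hypothetical; no network calls)."""

    def complete(self, prompt: str) -> str:
        return f"echo: {prompt}"


class UppercaseModel:
    """Stand-in for a second provider with different behavior."""

    def complete(self, prompt: str) -> str:
        return prompt.upper()


# Swapping providers becomes a configuration change, not a code rewrite.
PROVIDERS: dict[str, TextModel] = {
    "echo": EchoModel(),
    "upper": UppercaseModel(),
}


def summarize(model: TextModel, text: str) -> str:
    """Application logic: knows nothing about which provider is behind `model`."""
    return model.complete(f"Summarize: {text}")
```

Here `summarize(PROVIDERS["echo"], "hello")` and `summarize(PROVIDERS["upper"], "hello")` run the same application code against two different back ends, which is the flexibility the first bet is asking for.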

These cannabis gummies keep selling out in 2024

If you've ever struggled to enjoy cannabis due to the harshness of smoking or vaping, you're not alone. That’s why these new cannabis gummies caught our eye.

Mood is an online dispensary that has invented a “joint within a gummy” that’s extremely potent yet federally legal. Their gummies are formulated to tap into the human body’s endocannabinoid system.

Although this system was discovered in the 1990s, farmers and scientists at Mood were among the first to figure out how to tap into it with cannabis gummies. Just one of their rapid-onset THC gummies can get you feeling right within 5 minutes!

Share your thoughts about the above advertisement in our feedback section below. I’m interested in knowing what you think about it.

Until next time, take it one bit at a time!

Rob