Welcome to 8 bits for a Byte: Are you riding AI's next big wave, or getting swept away by the hype? This week's newsletter cuts through the noise to bring you actionable insights on the open source AI revolution that's transforming enterprise strategy. From Meta's groundbreaking Llama models to practical frameworks for running data science projects, we're arming you with the knowledge to stay ahead. Read on to discover why major players are betting big on open source AI and how it could reshape your competitive edge. Must-read highlights: Meta's Open Source AI Dominance | Salesforce's AI Platform Strategy | Amazon's AI Agent Architecture | Data-Driven Project Management | The Future of AI Applications.

We value your input! Take just a minute to fill out our short survey and help shape the future of AI Quick Bytes. Your feedback is essential for creating content you love.

Proxy, the AI Agent for Everyday Life

Imagine if you had a digital clone to do your tasks for you. Well, meet Proxy…

Last week Convergence, the London-based AI start-up, revealed Proxy to the world, billing it as the first general AI agent.

Users are asking things like “Book my trip to Paris and find a restaurant suitable for an interview” or “Order a grocery delivery for me with a custom weekly meal plan”.

You can train it however you choose, so every Proxy is different and personalised to how you teach it. The more you teach it, the more it learns about your personal workflows and begins to automate them.

Let’s Get To It!

Welcome to 8 bits for a Byte!

Here's what caught my eye in the world of AI this week:

The open source revolution in AI isn’t just on the horizon; it’s already changing the game. The days of open source being a side player are over: backed by giants like Meta, it's now a force enterprises can’t afford to ignore. With Meta’s Llama models gaining traction and platforms like Hugging Face’s HUGS making these technologies more accessible, open source AI is redefining competition and giving businesses the chance to take control of their tech. If Meta stays on this path, open source AI could become a major moneymaker for the company, driving innovation that reshapes both internal operations and customer-facing solutions.

Executive Summary

The technology landscape is shifting as open source AI surges from a niche topic to a major force, challenging the dominance of proprietary tech. Once overshadowed by headline rivalries such as Apple versus Microsoft, or Apple versus Google in mobile, open source is now center stage. Meta's Llama models and other open source initiatives are driving enterprise interest, offering cost-effective, ownership-friendly alternatives to proprietary solutions. The rise of platforms like Hugging Face’s HUGS and models like Genmo's Mochi 1 highlights the potential for open source AI to reshape industries, intensify competition, and redefine business strategies around consumer value.

Key Points

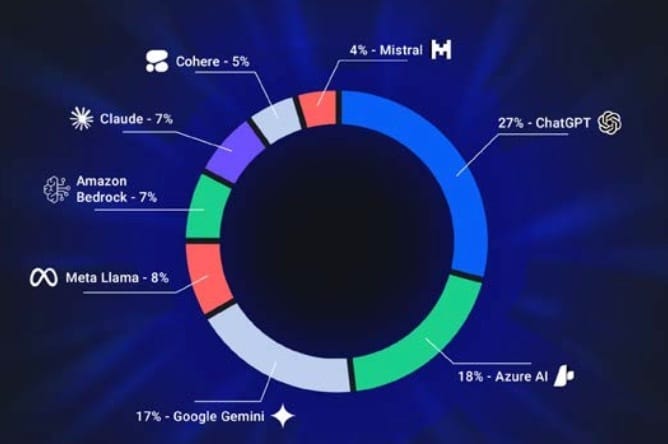

1. Open Source AI Gains Ground: Major open source AI models such as Meta's Llama 3.2 and Mistral's technologies are rapidly being adopted by enterprises looking to reduce API fees and maintain control over their tech. With over 400 million downloads, open source is no longer an underdog but a competitive player.

2. Supportive Ecosystems Are Emerging: Companies like Hugging Face are making open source AI accessible through new services like HUGS, empowering enterprises that lack the infrastructure to adopt and deploy open source solutions without heavy investment in hardware.

3. Market Impact and Future Trends: The growth of open source AI could pressure proprietary leaders like OpenAI to justify their premium pricing. Businesses positioned to thrive will be those that create application layers or "wrappers" around these models, making the technology usable and marketable to consumers.

Outlook

This trend signals a positive shift for innovation, with competition driving better consumer options and potentially lowering costs. However, it remains crucial to watch how businesses evolve to harness open source AI effectively.


Quote of the week

My thoughts on the quote: You always remember your first! Deming was the first management guru I read, and he has had a profound influence on my approach to business and management.

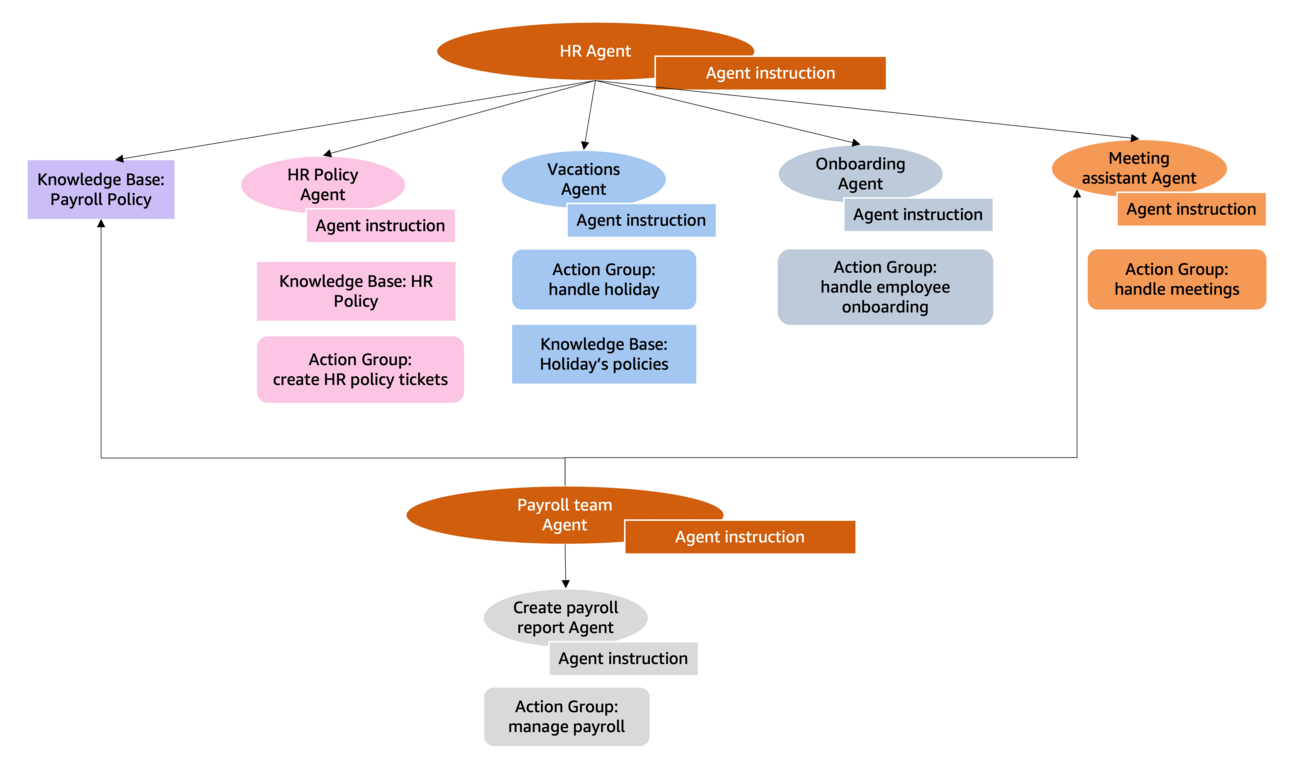

As I dive deeper into creating a Responsible AI education course for an AI-First education platform (exciting announcements coming soon!), one thing is crystal clear: behind the “magic” of AI lies meticulous architecture, thoughtful design, and precise data labeling. Amazon’s recent article demystifies the process of building robust generative AI applications, offering clear, actionable insights. Whether you use their platform or not, it’s packed with practical knowledge.

Executive Summary

This article shares best practices for creating AI agents that are accurate, reliable, and user-centric. The steps it covers, from gathering high-quality ground truth data to defining agent scope, ensuring modular design, and continuously refining performance, are essential for success. By following these guidelines, developers can build scalable, intelligent agents that integrate seamlessly with enterprise knowledge and deliver exceptional user experiences.

Key Points

1. Ground Truth Data as a Foundation: Collecting and maintaining high-quality, diverse datasets is crucial for training reliable AI agents. These datasets should reflect real-world interactions, ensuring comprehensive benchmarks and helping to identify edge cases. Regular updates based on user behavior are key for continuous improvement.

2. Clear Scope and Modular Design: Clearly defining what an AI agent can and cannot do helps streamline development and improve reliability. Using a modular, multi-agent architecture improves maintainability and scalability, allowing small, focused agents to collaborate effectively without duplicating functions.

3. User Experience and Ongoing Evaluation: The agent’s tone, clarity of instructions, and integration with enterprise knowledge bases shape the user experience. Continuous testing, including human evaluation and A/B testing, ensures that agents remain accurate, engaging, and capable of handling complex tasks.
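To make the ground-truth and scope ideas concrete, here is a minimal Python sketch of an evaluation loop. It is not Amazon's method or any Amazon API: `GroundTruthCase`, `evaluate_agent`, and `toy_agent` are hypothetical names, the toy agent is a lookup table standing in for a real one, and exact-match scoring is a simplifying assumption. Tagging cases (typical, edge case, out of scope) gives each group its own score, so a regression on edge cases is not hidden by a good overall number.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class GroundTruthCase:
    """One labeled example: a user query and the answer we expect."""
    query: str
    expected: str
    tag: str = "typical"  # e.g. "typical", "edge_case", "out_of_scope"


def evaluate_agent(agent: Callable[[str], str],
                   cases: list[GroundTruthCase]) -> dict:
    """Run every ground-truth case through the agent and score it.

    Uses naive exact matching (case- and whitespace-insensitive); real
    evaluations usually need fuzzier scoring or human review.
    """
    failures = []
    per_tag: dict[str, list[int]] = {}  # tag -> [correct, total]
    for case in cases:
        answer = agent(case.query)
        correct = answer.strip().lower() == case.expected.strip().lower()
        stats = per_tag.setdefault(case.tag, [0, 0])
        stats[0] += int(correct)
        stats[1] += 1
        if not correct:
            failures.append((case.query, case.expected, answer))
    total = len(cases)
    return {
        "accuracy": (total - len(failures)) / total if total else 0.0,
        "accuracy_by_tag": {t: c / n for t, (c, n) in per_tag.items()},
        "failures": failures,  # review these to find new edge cases
    }


def toy_agent(query: str) -> str:
    """Hypothetical stand-in for a real agent: a fixed lookup table."""
    answers = {
        "What are your opening hours?": "9am to 5pm",
        "Can I return an opened item?": "Yes, within 14 days",
    }
    # Out-of-scope requests get an explicit refusal instead of a guess
    return answers.get(query, "Sorry, I can't help with that")


cases = [
    GroundTruthCase("What are your opening hours?", "9am to 5pm"),
    GroundTruthCase("Can I return an opened item?", "Yes, within 30 days", "edge_case"),
    GroundTruthCase("Book me a flight to Paris", "Sorry, I can't help with that", "out_of_scope"),
]
report = evaluate_agent(toy_agent, cases)
print(report["accuracy_by_tag"])
# {'typical': 1.0, 'edge_case': 0.0, 'out_of_scope': 1.0}
```

The out-of-scope case checks the "what the agent cannot do" boundary as deliberately as the happy path; new failures found in production would be added back into `cases` over time.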

Authors - Maira Ladeira Tanke, Mark Roy, Navneet Sabbineni, and Monica Sunkara

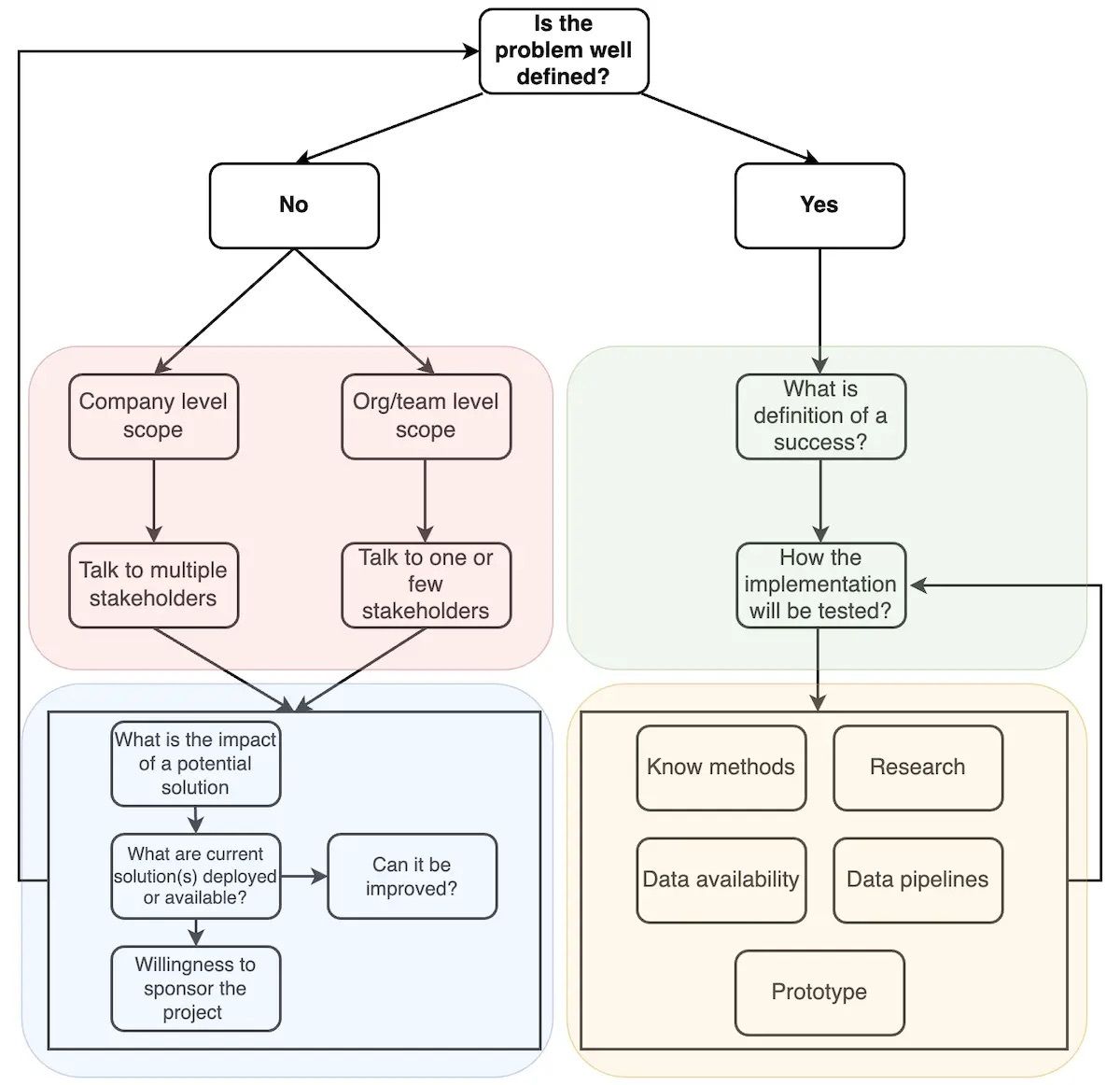

It’s surprising how often the simplest frameworks yield the most effective results in data science projects. Having witnessed poorly managed teams and misguided project approaches firsthand, I can confidently say that the straightforward model shared in this article is both essential and deceptively powerful. The line often credited to Mark Twain (it actually traces back to Blaise Pascal), “I didn’t have time to write a short letter, so I wrote a long one,” comes to mind: distilling something down to its core takes many iterations. Starting data science projects on this clear, strategic foundation would vastly improve project ROI and lead to greater long-term success.

Executive Summary

Running successful data or applied science projects requires a strategic approach that goes beyond technical execution. With over nine years of experience at AWS and Amazon, the author outlines a comprehensive model that emphasizes the importance of well-defined business problems, effective stakeholder management, precise scoping, and clear success metrics. This framework helps avoid common pitfalls and ensures that science projects deliver impactful, scalable results.

Key Points

1. Start with Clear Problem Definition: The foundation of any successful project is a well-defined business problem. Without this clarity, projects risk becoming aimless or ineffective. Engaging stakeholders early to outline tangible, profit-driving objectives is essential to set the stage for success.

2. Scoping and Stakeholder Alignment: Identifying stakeholders and scoping projects with high ROI ensures prioritization of impactful work. Projects must be evaluated for existing solutions, resource needs, and long-term commitment from stakeholders to avoid roadblocks during execution.

3. Metrics and Continuous Engagement: Defining success metrics before project kickoff is crucial. These should align with stakeholder expectations and include plans for thorough testing and clear baseline comparisons. Regular check-ins and stakeholder involvement throughout execution ensure adherence to goals and readiness for real-world application.

This approach provides a stable blueprint that balances planning, execution, and flexibility to navigate challenges, setting the stage for scientifically grounded, business-aligned results.
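One way to pin down "success metrics before kickoff, with clear baseline comparisons" is to write the criteria down as data before any modelling starts. The Python sketch below is a hypothetical illustration, not the author's framework: `SuccessCriterion`, `review`, the metric names, and the relative-lift rule are all assumptions, and the numbers are invented.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SuccessCriterion:
    """A success metric agreed with stakeholders before kickoff."""
    metric: str
    baseline: float            # value achieved by the current solution
    min_relative_lift: float   # 0.05 means "beat the baseline by at least 5%"
    higher_is_better: bool = True

    def is_met(self, observed: float) -> bool:
        if self.baseline == 0:
            raise ValueError("baseline must be non-zero to compute relative lift")
        lift = (observed - self.baseline) / abs(self.baseline)
        if not self.higher_is_better:
            lift = -lift  # for metrics like handling time, a drop is a gain
        return lift >= self.min_relative_lift


def review(criteria: list[SuccessCriterion],
           observed: dict[str, float]) -> dict[str, bool]:
    """Compare test results against every pre-agreed criterion."""
    return {c.metric: c.is_met(observed[c.metric]) for c in criteria}


# Made-up numbers, purely for illustration
criteria = [
    SuccessCriterion("conversion_rate", baseline=0.040, min_relative_lift=0.05),
    SuccessCriterion("avg_handling_minutes", baseline=12.0,
                     min_relative_lift=0.10, higher_is_better=False),
]
results = review(criteria, {"conversion_rate": 0.043, "avg_handling_minutes": 11.5})
print(results)
# {'conversion_rate': True, 'avg_handling_minutes': False}
```

Freezing the criteria (`frozen=True`) is a small nudge against quietly moving the goalposts once results come in; the baseline is what the project has to beat, not just match.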

Author - Dzidas Martinaitis


As I often say, data is the new sexy, and Marc Benioff’s insights in this interview only reinforce that truth. If you haven’t been prioritizing your data strategy, now is the moment to get serious. Salesforce's focus on a robust, integrated platform shows that true AI success is built on quality data and smart implementation—not hype. So, let’s cue up that Justin Timberlake track and make data the star of your strategy!

Executive Summary

In a compelling interview, Salesforce CEO Marc Benioff discusses his career journey, the genesis of Salesforce, and the company's current AI-driven initiatives. Central to the conversation is Agentforce, Salesforce’s new wave of AI agents, which Benioff believes will redefine enterprise operations. He critiques the overhype around LLMs (large language models) and stresses the importance of integrating AI thoughtfully to enhance customer experiences and business KPIs. Benioff's insights highlight Salesforce's strategic advantage in AI and how a robust platform approach positions the company for continued leadership.

Key Points

1. Agentforce and AI’s Next Wave: Benioff is more excited than ever about AI, especially Agentforce, which integrates an "agentic layer" on top of existing Salesforce tools. He sees this as a transformative step that automates and augments human workflows, boosting productivity and customer relationships in ways previously unimaginable.

2. Critique of AI Hype: Benioff challenges popular narratives around LLMs and certain AI tools, like Microsoft’s Copilot, which he views as ineffective. He warns against AI's "false prophets" who oversell capabilities without delivering true value, advocating instead for AI built into platforms that yield practical, impactful outcomes.

3. Data as the Foundation for AI Success: Salesforce’s deep data integration gives it a strategic edge. Benioff underscores the importance of a strong data foundation combined with metadata and security models. He explains that without quality data, even the best AI tools fall short, positioning Salesforce’s approach as holistic and unparalleled in enabling accurate, reliable AI solutions.

This discussion underscores that the future of enterprise AI isn’t just theoretical; it’s actionable, rooted in effective integration, and requires a trusted platform and data to succeed.

Author - Ben Thompson

Automate Calls and Boost Conversions with AI Voice Assistants

Set up an AI receptionist (available 24/7) or an outbound lead qualifier (#Speedtolead). Book appointments, transfer calls, and extract info seamlessly. Integrates with HubSpot, GoHighLevel, and more. Deploy in minutes, no coding needed.

Friday Funnies - I have always had a passion for data, and I am so happy to say data is back and sexy! 🤣

[Testing] can drastically reduce the number of bugs that get into production… But the biggest benefit isn't about merely avoiding production bugs, it's about the confidence that you get to make changes to the system.

The best organizations I've worked with prioritize solid test coverage in critical areas to safeguard quality and stability. Without it, releases can feel like a nerve-wracking gamble, with every rollout a tense, high-stakes moment. Now imagine having the confidence to deploy on a weekend, something unthinkable in my experience! But with the newfound ease of creating robust tests using LLMs, I predict we’re on the verge of a major shift, one that will improve uptime and make smooth, uneventful releases the norm.

Executive Summary

In the author's experience, using Large Language Models (LLMs) to automate the creation of comprehensive test suites has significantly boosted the efficiency and quality of software development. With LLMs, engineers can draft thorough, reliable tests in minutes, which preserves code integrity and accelerates feature development. The result is higher productivity, cleaner codebases, and faster development without sacrificing quality.

Key Points

1. Accelerated Test Creation: LLMs like OpenAI’s o1-preview and Anthropic’s Claude 3.5 Sonnet enable engineers to generate robust unit tests swiftly. This can transform testing from a time-consuming task into one that fits seamlessly into a fast-paced development cycle, making it easier for teams to maintain consistent testing practices.

2. Enhanced Productivity and Code Quality: With the speed and ease of generating tests using LLMs, engineers can focus more on core development activities. This shift leads to cleaner, safer code and reduces the likelihood of skipped testing due to time constraints, improving the overall quality of the software.

3. Best Practices for Effective LLM Integration: To maximize the benefits, teams should review and refine LLM-generated tests, customize prompts for their specific needs, and provide strong examples that reflect their codebase’s style. Iterative checking ensures that generated code meets standards, covers essential edge cases, and functions as intended.
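To show what "covers essential edge cases" looks like once a generated suite has been reviewed, here is a small Python sketch using the standard `unittest` module. No LLM call is shown; the `apply_discount` function and every test name are invented for illustration. The suite checks the typical path plus boundaries, rounding, and invalid input, which are the spots where a reviewer most often catches a generated test asserting the wrong thing.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical function under test: apply a percentage discount.

    Rounds to 2 decimal places and rejects out-of-range inputs.
    """
    if price < 0:
        raise ValueError("price must be non-negative")
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTests(unittest.TestCase):
    """Tests of the kind an LLM might draft, kept after human review."""

    def test_typical_discount(self):
        self.assertEqual(apply_discount(100.0, 20), 80.0)

    def test_zero_percent_leaves_price_unchanged(self):
        self.assertEqual(apply_discount(59.99, 0), 59.99)

    def test_full_discount_is_free(self):
        self.assertEqual(apply_discount(42.0, 100), 0.0)

    def test_result_is_rounded_to_cents(self):
        # 19.99 * 0.85 = 16.9915, which rounds to 16.99
        self.assertEqual(apply_discount(19.99, 15), 16.99)

    def test_rejects_percent_out_of_range(self):
        with self.assertRaises(ValueError):
            apply_discount(10.0, 150)

    def test_rejects_negative_price(self):
        with self.assertRaises(ValueError):
            apply_discount(-5.0, 10)
```

Saved as a file such as `test_discount.py` (a hypothetical name), the suite runs with `python -m unittest test_discount`. One behavior per test keeps review fast: a wrong expectation shows up as a single, clearly named failure.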

Conclusion

Using LLMs for generating tests is a game-changer for engineering teams, reducing the time needed to create comprehensive test suites and fostering a more robust development workflow. This approach not only maintains high code quality but also keeps development agile and responsive.

Author - John Wang

Your voice matters, and your words can ignite change! I’d love your support in sharing a testimonial — whether written or video — about your experience with AI Quick Bytes. Your feedback goes a long way in helping grow our community, a space built with passion, hard work, and a shared love for all things AI.

As a thank-you, those who share a testimonial will receive an exclusive invitation to a FREE, no-pitch one-hour webinar, "Unlock AI Strategic Leadership Techniques For Immediate Impact." This is your chance to get direct, personalized insights as I answer every question you have about AI leadership.

Here’s a preview of what you’ll gain:

Demystifying AI Leadership: Discover what it truly means to lead successful AI-driven projects, breaking down the complexities with ease.

Essential Skills for Success: Learn the key skills that separate a competent manager from a visionary AI leader, whether in product, program, or project management.

AI Success Life Cycle: Understand how to take AI from discovery to production and learn the strategies to scale effectively.

This session is tailor-made for C-suite executives, business leaders, founders, consultants, and experienced project, program, and product managers. If you've been building software but aren't quite sure how to confidently integrate AI, this is your opportunity to gain the clarity and insights you need.

Whether you’re looking to pivot your career or elevate your current role, this FREE session is your gateway to the future of AI leadership. Join us and take the next step in your AI journey!

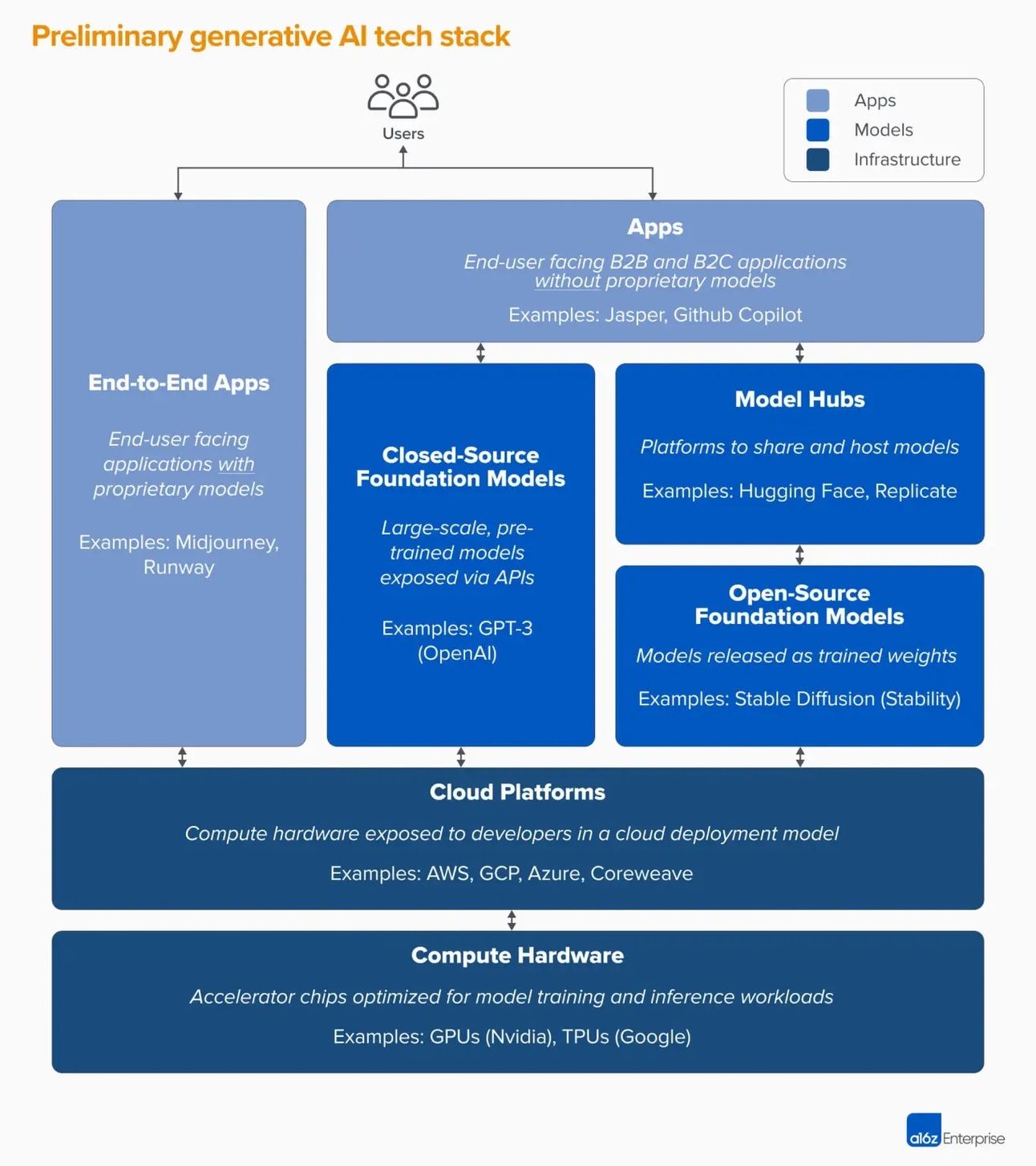

While many investors continue to chase the allure of AI infrastructure, I've come to realize that the true magic of generative AI lies in its applications, where innovation meets real-world impact. The infrastructure space, ruled by tech behemoths with deep pockets and rapid advancements, poses immense challenges for startups. The application layer, however, is a goldmine of opportunity, especially on the consumer side, where startups can thrive by creating unique, personalized user experiences and capturing a wealth of data to continuously improve and differentiate themselves. This is where agile innovation, customer ownership, and fast go-to-market strategies come into play, redefining how AI enhances everyday life.

Executive Summary

While significant investment continues to flow into the infrastructure layer of AI, the real potential for innovation lies in the application layer, especially consumer-facing apps. With giants like Microsoft, Google, and Amazon dominating AI infrastructure through massive R&D investments, startups may find it difficult to compete directly. However, the application layer offers rich opportunities for startups to create unique, user-centric solutions that leverage existing infrastructure, reshaping industries from finance to entertainment.

Key Points

1. The Power Shift to Applications: Startups should focus on developing consumer applications that harness Gen AI to deliver new, personalized user experiences. These include AI-driven content creation, gaming, and healthcare solutions. Unlike the heavily contested infrastructure market, the application layer allows for faster go-to-market strategies and novel business models, such as AI-powered services.

2. Owning the Customer is Crucial: The application layer's main advantage lies in owning user data and customer relationships. This data can be leveraged to refine offerings and build a competitive edge, fostering deeper personalization and loyalty. Startups can establish strong moats by prioritizing user data ownership and continuous value delivery.

3. Challenges and Strategic Considerations: While building on existing Gen AI platforms accelerates development, startups face platform risk from big tech expanding their services. Founders should carefully assess how much unique value they add beyond the capabilities of these platforms and ensure they innovate quickly to stay ahead.

Conclusion

Big tech's infrastructure dominance means startups should focus on creative applications that offer direct consumer value, using existing Gen AI tools to minimize costs and iterate swiftly. This strategic pivot echoes the shift seen in the mobile era, where true disruption occurred at the application level.

Author - Kevin Baxpehler

Until next time, take it one bit at a time!

Rob

P.S.

Join thousands of satisfied readers and get our expertly curated selection of top newsletters delivered to you. Subscribe now for free and never miss out on the best content across the web!