Welcome to this edition of AI Quick Bytes. In this issue, we dive into the revealing 2024 AI Security Report, explore how "taste" is becoming a key differentiator in Silicon Valley's AI landscape, and examine the latest developments in EU AI regulations. Stay informed and ahead of the curve as we navigate the intersection of AI technology, security, and business strategy together.

Quick bits

🧩 Strategy: 2024 State of AI Security Report

📊 Trends: Winning the AI Wars will take good Taste

🛠️ Training & Tools: Meta, Spotify Reportedly Criticize AI Regulation In EU

Welcome to 8 bits for a Byte!

Let’s Get To It!

🧩 Strategy

As AI adoption accelerates across industries, the security of these systems is coming into sharp focus. The 2024 State of AI Security Report from Orca Security examines the key risks and vulnerabilities organizations face when deploying AI models, with findings aimed at IT leaders and security professionals.

Executive Summary:

The report finds that more than 50% of organizations have adopted AI models and that deploying AI in the cloud brings significant security challenges. Default settings, often chosen for development speed, weaken security and expose organizations to risk. Additionally, the vulnerabilities found in AI packages are predominantly low to medium risk, but they could become more serious if left unaddressed.

Key Takeaways:

1. AI Adoption and Vulnerabilities

Over half (56%) of organizations deploy custom AI models, with Azure OpenAI leading among cloud AI services. However, 62% of organizations have deployed an AI package containing at least one known vulnerability. Most of these are rated low to medium risk, but they still pose a potential long-term threat.

2. Security Risks in Default Configurations

A staggering 98% of Amazon SageMaker notebook instances still use default root access, and nearly half (45%) of SageMaker buckets retain non-randomized names, exposing them to data manipulation and breaches.

3. Emerging AI Threats

AI services are susceptible to evolving risks, including model theft, input manipulation, and AI supply chain attacks. Orca's research aligns these threats with OWASP’s top 10 machine learning risks, signaling the need for proactive security measures.
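
The default-configuration findings in takeaway 2 lend themselves to a simple audit. The sketch below is a minimal illustration, not Orca's methodology. It assumes notebook metadata shaped like the response from boto3's `describe_notebook_instance` call (the `NotebookInstanceName` and `RootAccess` fields), and it assumes the default bucket naming pattern `sagemaker-<region>-<account-id>` used by the SageMaker Python SDK. The helper names and the regular expression are hypothetical.

```python
import re

# Assumed default bucket pattern: sagemaker-<region>-<12-digit account id>,
# for example sagemaker-us-east-1-123456789012.
DEFAULT_BUCKET_RE = re.compile(r"sagemaker-[a-z]{2}(?:-[a-z]+)+-\d+-\d{12}")


def is_default_bucket_name(name: str) -> bool:
    """Return True if the bucket name follows the predictable default pattern."""
    return DEFAULT_BUCKET_RE.fullmatch(name) is not None


def notebooks_with_root_access(notebooks: list[dict]) -> list[str]:
    """Return the names of notebook instances whose root access is enabled.

    A missing RootAccess field is treated as "Enabled", on the assumption
    that this is the service default.
    """
    return [
        nb["NotebookInstanceName"]
        for nb in notebooks
        if nb.get("RootAccess", "Enabled") == "Enabled"
    ]


if __name__ == "__main__":
    # Hypothetical inventory data for illustration only.
    sample_notebooks = [
        {"NotebookInstanceName": "research-nb", "RootAccess": "Enabled"},
        {"NotebookInstanceName": "prod-nb", "RootAccess": "Disabled"},
    ]
    sample_buckets = ["sagemaker-us-east-1-123456789012", "ml-data-7f3a9c"]

    print("Root access enabled:", notebooks_with_root_access(sample_notebooks))
    print("Default-named buckets:",
          [b for b in sample_buckets if is_default_bucket_name(b)])
```

In practice the inventory would come from your cloud provider's API or a posture-management tool; the point is only that both findings are mechanical checks that can run on a schedule.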

OWASP (the Open Worldwide Application Security Project) maintains a Machine Learning Security Top 10 that highlights the vulnerabilities and threats specific to machine learning (ML) systems. These risks reflect the unique security challenges of AI/ML models and applications.

Here’s a breakdown of the ten risks on OWASP’s list (the numbering below is this newsletter's, not OWASP's own ML01 to ML10 order):

1. Data Poisoning

Attackers manipulate the training data to corrupt or skew the model’s learning process, leading it to make incorrect or harmful decisions.

2. Model Inversion Attack

Attackers reverse-engineer a model to extract sensitive information about its training data, even if they don’t have direct access to the data.

3. Membership Inference Attack

An adversary determines whether a specific data point was part of the model’s training set, potentially exposing private or sensitive data.

4. Model Theft

Unauthorized access to the model's architecture, parameters, or training data allows attackers to steal or replicate the model for malicious purposes.

5. AI Supply Chain Attacks

Compromising the integrity of a machine learning library, model, or a third-party package introduces vulnerabilities and allows the injection of malicious code or logic.

6. Transfer Learning Attack

Attackers manipulate models by using transfer learning (reusing pre-trained models) to implant harmful behaviors in the final application without detection.

7. Model Skewing

Altering the distribution of input data used to train the model results in the model generating biased or incorrect predictions during deployment.

8. Output Integrity Attack

Manipulating the model’s output to force it to deliver incorrect or harmful results to the system or user.

9. Input Manipulation

Adversarial attacks involve modifying input data (e.g., slightly altering images or text) to trick the model into making incorrect predictions.

10. Model Poisoning

Altering the model parameters directly to induce specific misbehaviors or incorrect outcomes, often used to exploit the model in future operations.
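
To make the input manipulation item concrete, here is a minimal sketch on a toy linear classifier. It is an illustration under stated assumptions, not an attack on any real system: the weights, inputs, and function names are all hypothetical. It uses the sign-of-gradient idea; for a linear model the gradient of the score with respect to the input is simply the weight vector.

```python
def predict(weights, bias, x):
    """Toy linear classifier: label 1 if the weighted sum is positive, else 0."""
    score = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if score > 0 else 0


def sign(v):
    """Return -1, 0, or 1 according to the sign of v."""
    return (v > 0) - (v < 0)


def perturb(weights, x, epsilon, target_label):
    """Shift each feature by at most epsilon toward the target label.

    Moving each feature along sign(weight) raises the score; moving
    against it lowers the score.
    """
    direction = 1 if target_label == 1 else -1
    return [xi + direction * epsilon * sign(w) for w, xi in zip(weights, x)]


# Hypothetical model and input, chosen only for illustration.
WEIGHTS = [2.0, -3.0, 1.0]
BIAS = 0.0
original = [0.4, 0.1, 0.1]
adversarial = perturb(WEIGHTS, original, epsilon=0.15, target_label=0)

if __name__ == "__main__":
    print("original label:   ", predict(WEIGHTS, BIAS, original))
    print("adversarial label:", predict(WEIGHTS, BIAS, adversarial))
```

No feature moves by more than 0.15, yet the predicted label changes. Real attacks on image or text models follow the same logic at much larger scale.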

These risks underscore the importance of securing every aspect of the machine learning lifecycle—from data collection and model development to deployment and maintenance.
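
One small example of a lifecycle control, relevant to the supply chain and model poisoning items: verify a model artifact against a pinned checksum before loading it. This is a minimal sketch using only Python's standard library. The function names and sample bytes are hypothetical, and a checksum only helps if the pinned value comes from a trusted source.

```python
import hashlib
import hmac


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of the data as a lowercase hex string."""
    return hashlib.sha256(data).hexdigest()


def verify_artifact(data: bytes, expected_sha256: str) -> bool:
    """Return True only if the artifact's digest matches the pinned value."""
    # compare_digest avoids leaking how many leading characters matched.
    return hmac.compare_digest(sha256_hex(data), expected_sha256.lower())


if __name__ == "__main__":
    # Hypothetical artifact contents standing in for a model file.
    trusted = b"model-weights-v1"
    pinned = sha256_hex(trusted)  # recorded when the model was approved
    tampered = b"model-weights-v1-with-backdoor"

    print("trusted artifact ok: ", verify_artifact(trusted, pinned))
    print("tampered artifact ok:", verify_artifact(tampered, pinned))
```

A check like this catches a swapped or modified file. It does not catch a model that was already poisoned before the checksum was recorded.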

📊 Trends

The AI wars have only just begun, and taste is going to be the differentiator, according to an insightful post by Anu Atluru.

Executive Summary:

In today's rapidly evolving tech landscape, software's once-dominant role is shifting as taste emerges as the new frontier in Silicon Valley. No longer is technical prowess enough to dominate markets; products must now combine utility with cultural relevance, emotional resonance, and design excellence. As software commoditizes, taste—defined by aesthetics, user experience, and emotional connection—has become the key differentiator in tech products. This evolution challenges founders and venture capitalists alike to navigate a world where cultural resonance matters as much as technical innovation.

3 Key Takeaways:

1. Taste is the New Competitive Edge:

As software becomes commoditized, "taste"—a combination of design, aesthetics, and emotional connection—is now the key to product differentiation.

Companies like Apple, Tesla, and newer startups like Arc and Linear are leading the way by blending technology with cultural resonance.

2. Founders Must Master More than Code:

Success in today’s market requires founders to understand design, branding, and storytelling, in addition to technical innovation.

Technical expertise is necessary but not sufficient; founders must now shape products that emotionally connect with users and align with cultural values.

3. Investors Are Betting on Taste-Driven Companies:

Venture capitalists are shifting focus, investing in companies that can capture the cultural zeitgeist alongside delivering great products.

It’s no longer just about backing the best technical team—investors look for startups that resonate with diverse, culturally driven markets.

This evolution in Silicon Valley signals a new era where tech and culture are inseparable, and taste is the driving force behind the next wave of innovation.

Transforming an organization: hard. Implementing AI in the organization: harder. Doing both: IMPOSSIBLE. Until now! AI Quick Bytes will help you navigate it all. The key is adaptability.

Step 1 - Take Action In Your Career!

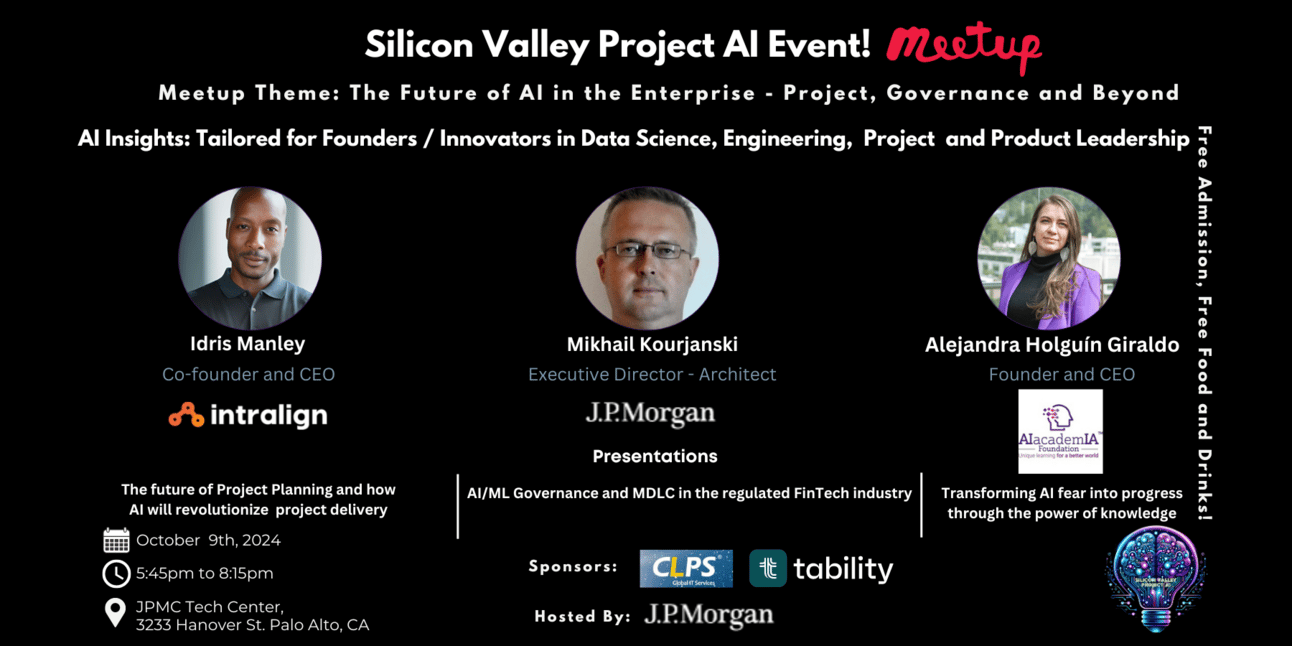

Dream of being a Strategic AI Leader? We will outline the roadmap for your success. You will learn about the different stages of the AI maturity model, the AI success life cycle, and the AI business roadmap. Join us for our upcoming FREE virtual workshop, “Exclusive AI Leadership Insights - Creating a Roadmap For AI Success,” on September 27th from 8 AM to 9 AM PST.

What’s stopping you?

🛠️ Training & Tools

EU Faces Criticism for "Inconsistent" AI and Data Privacy Regulations

The European Union is under fire from tech companies such as Meta and Spotify, along with other industry leaders, for its fragmented approach to AI and data privacy. Their open letter calls for clearer regulations to foster AI innovation in Europe, which the signatories say lags behind global competitors.

Key Takeaways:

1. Fragmented Regulations Stifling Innovation

Industry leaders are pushing back against the EU's inconsistent AI regulations, which complicate data usage for AI training and hinder competitiveness in the global AI market.

2. Meta Halts AI Data Collection

Following regulatory uncertainty, Meta paused its plans to use European user data for AI training, reflecting broader industry frustrations with unclear data privacy rules under GDPR.

3. European AI Market Growth

Despite regulatory challenges, Europe’s AI market is projected to grow to $46.7 billion in 2024, although it still trails behind the much larger U.S. AI market valued at $502 billion.

Author - Sana Tahir

Until next time, take it one byte at a time!

Rob
