Welcome to this edition of AI Quick Bits. This week, we're diving into Vinod Khosla and Elad Gil's expert takes on AI, exploring responsible AI strategies with real-world examples, and revisiting Steve Jobs' timeless view of AI as a tool and human amplifier (kinda sort of). Plus, discover a fun and engaging prompt to deepen your understanding of complex topics. Let's get started!

Quick bits

🧩 Strategy: Vinod Khosla and Elad Gil's takes on AI. My podcast summary, plus links to the not-to-be-missed podcasts.

📊 Trends: Responsible AI Basics with Real-World Examples.

🛠️ Tools: Steve Jobs shares that AI is just another tool to amplify man's inherent intellectual ability and free people to do more creative work.

💡 Prompts: A Fun and Engaging AI Prompt to Learn and Explore.

Let’s Get To It!

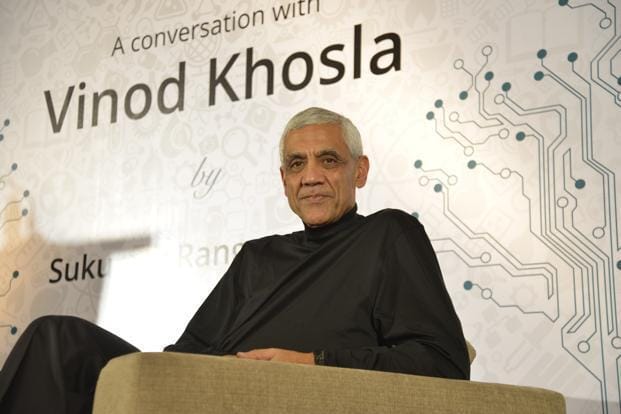

Deeper Bytes

When listening to the More or Less Podcast, I became acutely aware of the difference between mere mortals and SV OGs. Vinod’s and Elad’s takes on the future of AI are enlightening and a must-listen. The ability to get their unfiltered coaching and input is priceless, and it is why I love listening to podcasts with Biz / Tech OGs who have been super successful. Below are some of my key takeaways. For what it is worth, The Information has the best Tech / Finance writing around IMHO. It ain’t cheap, brethren, but it is totally worth it if you want to stay up to date with the latest in Tech and Finance.

Long-Term Vision: Khosla encourages a long-term perspective, suggesting that true AI innovation and its economic benefits will unfold over the next decade.

Vinod Khosla contrasts the creation of large models like those by Microsoft and OpenAI with niche model opportunities by highlighting the following points:

1. Scale and Resources: Large models require significant resources and infrastructure, often making them accessible only to big tech companies.

2. Generalization vs. Specialization: Large models are designed for broad applications, while niche models can be tailored to specific tasks, offering higher efficiency and accuracy in those areas. Think retrieval-augmented generation (RAG) and fine-tuning.

3. Market Dynamics: Niche models present opportunities for startups to innovate and compete by addressing specific needs that large models might overlook.

4. Adoption and Integration: Large models may face challenges in seamless integration into existing workflows, whereas niche models can be more easily adopted for specialized use cases.

5. Economic Viability: Niche models can be more economically viable for smaller companies or industries with unique requirements, avoiding the immense costs associated with developing and maintaining large-scale models.

Elad Gil

I go deep on one topic area; however, I highly recommend listening to the entire podcast.

Investment and Adoption Dynamics in AI

Elad Gil delves into the current state and future trajectory of AI investments and adoption, highlighting several key points:

1. Massive Investment by Big Companies:

Large tech companies like Microsoft (through Azure), Google, Amazon (AWS), and others are investing billions of dollars into AI. These investments are seen in their infrastructure, foundation models, and various AI services.

For example, Azure reported a significant revenue lift attributed to AI services, showcasing the immediate financial impact and potential for continued growth in this area.

2. Phases of AI Adoption:

Early Phase: This includes building demos and internal tools, which is the initial stage where companies experiment with AI to improve specific processes or create proofs of concept.

Internal Tooling: Companies are beginning to use AI internally to streamline operations. An example cited is Klarna, whose OpenAI-powered assistant reportedly took on work equivalent to about 700 full-time customer support agents, enhancing efficiency and reducing costs.

External Products: While still emerging, AI-driven products for consumers and enterprises are being developed and show promise for broader adoption.

Enterprise Integration: Many enterprises are currently in the early stages of integrating AI into their operations. This adoption is expected to grow significantly over the next few years as companies become more comfortable with the technology and its benefits.

3. Under- and Over-hyped Perspectives:

Elad believes that while there is substantial hype around AI, particularly large language models, the true potential might still be underappreciated because full enterprise adoption is yet to be realized.

Despite the hype, there's already notable revenue and usage growth, indicating genuine value being generated.

4. Challenges with AI Startups:

The majority of AI startups may not succeed, as is typical with any major technological wave. The challenge lies in finding the 1% that will.

Success often depends on identifying real use cases and solving specific problems that add significant value to users.

5. Strategic Investment and Buyouts:

Elad highlights the trend of AI-driven buyouts, where companies acquire AI startups to integrate their technology and enhance their offerings. This strategy can be particularly effective in consolidating and scaling AI innovations within established businesses.

This approach is contrasted with the traditional venture capital model of funding startups through various stages, suggesting a more integrated and strategic investment approach might be emerging.

6. Long-term Impact and Revenue Generation:

The true economic impact of AI will likely be seen in the next two to three years as enterprise adoption grows.

The current phase is characterized by rapid experimentation and deployment of AI in various business contexts, leading to incremental but significant improvements in efficiency and productivity.

By understanding these dynamics, investors, entrepreneurs, and tech and product leaders can better navigate the evolving AI landscape, identify promising opportunities, and develop strategies that align with the broader trends in AI adoption and investment.

📊 Trends

Responsible AI

There is a lot of conversation about Responsible AI. Below are Strategies, Examples, Critical Insights, and Next Steps to ensure Responsible AI implementation in your business:

📈 Strategy 1: Establish Clear Ethical Guidelines

Develop a comprehensive AI ethics policy that aligns with your company’s values and objectives.

Ensure transparency in AI decision-making processes and provide clear explanations for AI-driven outcomes.

Example: Google's AI Principles are a great benchmark. They emphasize accountability, transparency, and user privacy.

🔍 Strategy 2: Implement Robust Data Privacy Measures

Prioritize data privacy by incorporating techniques like anonymization and encryption.

Regularly audit your data practices to ensure compliance with regulations such as GDPR and CCPA.

Example: IBM’s approach to data privacy ensures that all AI data processes are compliant with international standards like GDPR.
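For readers who want to see what the first point in Strategy 2 can look like in practice, here is a minimal Python sketch. It shows keyed pseudonymization, which is a weaker cousin of full anonymization: identifiers are replaced with stable tokens, but the result is generally still treated as personal data under GDPR. The key, field names, and helper functions are all hypothetical and only there for illustration.

```python
import hashlib
import hmac

# Hypothetical secret for illustration only; a real system would load this
# from a secrets manager rather than hard-coding it.
SECRET_KEY = b"replace-with-a-real-secret"


def pseudonymize(value: str, key: bytes = SECRET_KEY) -> str:
    """Return a stable, keyed SHA-256 token for an identifier."""
    normalized = value.strip().lower().encode("utf-8")
    return hmac.new(key, normalized, hashlib.sha256).hexdigest()


def scrub_record(record: dict, pii_fields: tuple = ("email", "name")) -> dict:
    """Copy a record, replacing the listed PII fields with tokens."""
    return {
        field: pseudonymize(str(value)) if field in pii_fields else value
        for field, value in record.items()
    }


if __name__ == "__main__":
    customer = {"email": "Ann@example.com", "name": "Ann", "plan": "pro"}
    print(scrub_record(customer))
```

Because the same input always maps to the same token, records can still be joined for analytics without exposing the raw email or name. Encryption at rest and in transit would sit alongside this, not replace it.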

📋 Strategy 3: Integrate Responsibility into Product Requirements

Include a dedicated responsibility section in your Product Requirement Document (PRD).

Outline ethical considerations, potential biases, and mitigation strategies for each AI feature.

Example: The free AI Quick Bytes template for AI PRDs includes sections on potential biases and ethical implications, ensuring that every AI feature is developed responsibly.

💡 Strategy 4: Foster a Culture of Accountability

Create a cross-functional AI ethics committee to oversee AI projects and address ethical concerns.

Train employees on ethical AI practices and encourage a culture of responsibility and continuous learning.

Example: Salesforce’s Trusted AI Principles help ensure all projects adhere to their ethical standards.

Critical Insight

Most organizations default accountability for AI to IT or the CIO, or don’t assign it at all, yet authority and true accountability remain with the business. Responsible governance requires the business to take accountability for its approach to AI.

Very few organizations have a formal and structured approach to AI governance.

AI can introduce or intensify risks that affect the entire organization, but most organizations haven’t integrated AI risks into their enterprise risk management framework.

Policies are published without any controls to monitor and enforce compliance.

Next Steps

Start to govern AI responsibly by following a structured approach:

Identify key risks related to AI.

Identify a set of responsible AI principles.

Create an AI governance structure: identify key governing organizations, their mandate, key roles, and responsibilities.

Design an AI governance operating model.

Evaluate policy gaps using a policy framework.

Use your findings to develop a roadmap and communication plan to govern AI in your organization.

🛠️ Tools

Well, Steve kind of, sort of shared that AI is just a tool: he was actually talking about the personal computer. What's fascinating is that we're having the same conversations about AI today that we had when personal computers were first introduced. Ignore the hype: AI is just a tool. The more things change, the more they stay the same. It's also important to remember that there have been multiple "AI will change the world" moments that haven't panned out. Do I think this time will be different? Yes. Do I believe all the predictions about AI’s impact will come true? No. As I mentioned earlier, the biggest challenge isn't the technology itself but our ability to adapt to and embrace the changes AI will bring.

Is AI just a tool? What are your thoughts?

💡 Prompts

Did you know? Asking an AI to "simulate a conversation between two experts on a topic" can provide diverse perspectives and deeper insights into complex subjects. It's like listening in on a debate between specialists, helping you understand different angles of the topic.
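If you want to reuse this prompt across topics, a small Python helper can fill in the blanks for you. This is only a sketch: the function name, parameters, and the extra instructions about turns and a closing summary are my own assumptions, not a standard template. Paste the resulting text into whichever AI assistant you use.

```python
def build_expert_dialogue_prompt(
    topic: str, expert_a: str, expert_b: str, turns: int = 6
) -> str:
    """Build a 'two experts in conversation' prompt for a given topic."""
    return (
        f"Simulate a conversation between two experts on {topic}. "
        f"Expert A is {expert_a}. Expert B is {expert_b}. "
        f"Have them alternate for about {turns} turns, disagree where "
        "their perspectives genuinely differ, and explain jargon in "
        "plain language. Finish with a short summary of where they "
        "agree and where they differ."
    )


if __name__ == "__main__":
    print(
        build_expert_dialogue_prompt(
            topic="niche AI models versus large foundation models",
            expert_a="a venture investor focused on AI startups",
            expert_b="an enterprise CIO responsible for AI governance",
        )
    )
```

Choosing two experts with different incentives, as in the example above, tends to surface the "different angles" the prompt is meant to reveal.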

Until next time, take it one byte at a time!