8 bits for a Byte: LinkedIn's CPO just discussed their AI transformation playbook, and it's a masterclass in what actually works at enterprise scale. Not plug-and-play tools. Platform investment. Custom agents. A culture rewrite. Here's the blueprint.

This is exactly why AI is so exciting, and exactly why most companies will get it wrong. New ventures will build this way from day one. Incumbent enterprises? They'll need to undertake a focused, difficult, and transformative journey if they want to remain relevant. The question is whether you'll lead that journey or be displaced by someone who does.

Let’s Get To It!

Welcome To AI Quick Bytes!

Bit 1: Your Job Is Changing—Whether You Like It Or Not

LinkedIn has a unique view of the world of work, and their data should keep you awake at night. By 2030—just four years from now—70% of the skills required to do your current job will change. That's not a prediction about future roles; that's a prediction about the role you hold today.

If you're waiting for stability to return, you're waiting for something that isn't coming. The time constant of change is now shorter than the time constant of response. Translation: the world is moving faster than most organizations can adapt. The only question is whether you'll still have your seat when the music stops.

Bit: Your job title may survive; your job description won't.

70% of job skills will shift by 2030—your current competencies have a four-year shelf life.

70% of last year's fastest-growing jobs didn't exist the year before—new roles are emerging faster than training programs can respond.

The pace of change now outstrips organizational response times—agility isn't optional; it's survival.

ACTION BYTE: Audit your team's core competencies this quarter. Identify which skills face obsolescence risk and map a 12-month upskilling plan before the market forces your hand.

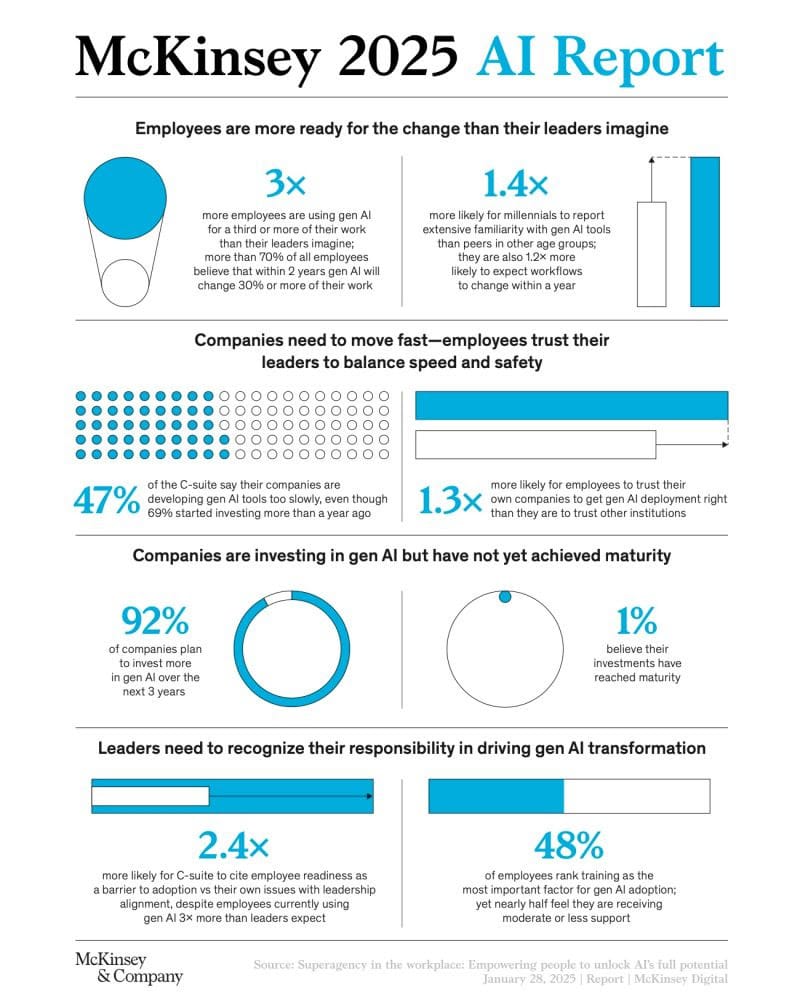

Bit 2: Quote of the Week

Robert Franklin

Bit 3: Navy SEALs, Not Infantry Divisions

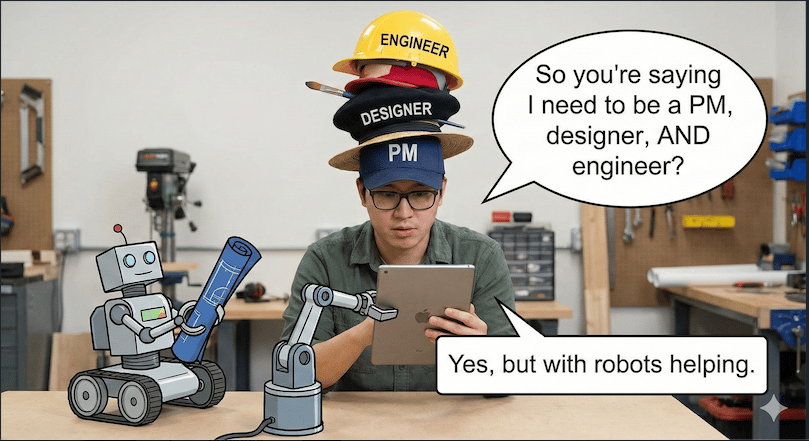

The old model: large, permanent teams organized by function—PM here, design there, engineering over there. The new model: small, mission-focused pods that assemble for a quarter, execute, and re-form. LinkedIn calls this the Full Stack Builder approach. Think Navy SEALs—cross-trained, nimble, assembled for the mission at hand.

This isn't about eliminating specialization. It's about changing the ratio. You still need deep experts building the platforms that enable everyone else. But you need fewer handoffs, fewer review chains, and more people who can flex across the stack. The organizations that win will be the ones that can reconfigure fastest.

Bit: Permanent structures optimize for yesterday; pods optimize for tomorrow.

Pods form around missions, not functions—assemble, execute, disband, repeat.

Cross-training is the new competitive advantage—builders who flex across disciplines move faster.

Specialists don't disappear; they become force multipliers, building the platforms that enable full-stack pods.

ACTION BYTE: Pilot one cross-functional pod this quarter. Pick a 90-day mission, assemble a small team that spans PM/design/eng, and measure velocity vs. your traditional structure. Let the data speak.

Bit 4: The Agent Ecosystem Playbook

LinkedIn didn't build one AI assistant—they built a purpose-specific ecosystem. Each agent solves one problem well, and an orchestration layer coordinates handoffs between them. Here's the lineup:

Trust Agent: Flags harm vectors and vulnerabilities in product specs. Caught issues in a years-old feature (Open to Work) that humans had missed.

Growth Agent: Critiques ideas against LinkedIn's unique loops, funnels, and historical A/B tests.

Research Agent: Persona-savvy, grounded in prior studies and support tickets.

Analyst Agent: Queries the LinkedIn graph without SQL dependency.

Maintenance Agent: Auto-resolves ~50% of failed builds.

Product Jammer: Orchestrates the whole ecosystem for the product development workflow.

Bit: One agent is a point solution; an orchestrated ecosystem is a compounding flywheel.

~50% of failed builds now auto-recover—engineers stay in flow while agents handle firefighting.

Trust Agent surfaced risks that sat dormant for years—institutional memory becomes searchable.

The orchestration layer multiplies value—agents that collaborate compound faster than agents that don't.

ACTION BYTE: Identify your three most repetitive knowledge-work bottlenecks. Evaluate whether a purpose-built agent—trained on your proprietary data—could collapse cycle time by 30%+. Start with one, then orchestrate.
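
For the builders in the audience, here is a minimal sketch of the "many small agents plus an orchestration layer" idea from this Bit. It is not LinkedIn's implementation: the agent functions, their toy rules, and the `Orchestrator` class are all hypothetical stand-ins, and the orchestrator only fans one spec out to every agent and collects the findings rather than managing real handoffs.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# Hypothetical agent signature: takes a product spec, returns a list of findings.
Agent = Callable[[str], List[str]]


def trust_agent(spec: str) -> List[str]:
    # Toy stand-in: flags any spec that mentions public visibility.
    return ["review visibility controls"] if "public" in spec.lower() else []


def growth_agent(spec: str) -> List[str]:
    # Toy stand-in: asks for an experiment plan if the spec does not mention one.
    return [] if "a/b test" in spec.lower() else ["add an A/B test plan"]


@dataclass
class Orchestrator:
    # Registry of purpose-specific agents, keyed by a short name.
    agents: Dict[str, Agent]

    def review(self, spec: str) -> Dict[str, List[str]]:
        # Run every registered agent on the same spec and group findings by agent.
        return {name: agent(spec) for name, agent in self.agents.items()}


orchestrator = Orchestrator(agents={"trust": trust_agent, "growth": growth_agent})
report = orchestrator.review("Add a public badge to member profiles.")
print(report)
```

The design point is that each agent stays small and single-purpose, so adding a new one is a one-line change to the registry instead of a rewrite of one giant assistant.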

Bit 5: Why Your Best People Adopt First

Here's a pattern that surprised LinkedIn's leadership: top performers are the earliest adopters of AI tools—and they see the biggest gains. If you expected AI to be a great equalizer, lifting average performers to new heights, the data doesn't support that story. The Matthew Effect applies: those who have, get more.

Why? Top talent has an innate drive to stay at the cutting edge of their craft. They don't wait for permission or formal rollouts. They experiment, iterate, and share what works. For leaders, this means your change management challenge isn't convincing your best people—it's giving permission and pathways to everyone else.

Bit: Top talent gives themselves permission; you just need to get out of the way.

Early adopters self-select from your best performers—find them and resource them.

Their wins become your proof points—success stories lower organizational resistance.

The equalizer myth is just that, a myth: AI amplifies existing capability gradients.

ACTION BYTE: Identify your top 5% this week. Give them explicit permission to experiment with AI tooling, protected time to explore, and a platform to share wins. They'll do your change management for you.

Bit 6: Sunday Funnies

Bit 7: The Golden Dataset Lesson

LinkedIn learned this the hard way: connecting an AI to your entire knowledge base and saying "reason over this" produces hallucinations, not intelligence. The model can't weight source quality. It doesn't know what matters. More data isn't better—curated data is.

The fix is labor-intensive but essential: "golden examples." LinkedIn's head of Trust manually filtered examples of what good looks like—not all the data, but the right data. It's the same principle that built their professional feed years ago. Quality training inputs produce quality outputs. If you're skipping curation to move fast, you're building on sand.

Bit: Garbage in, confident garbage out.

Broad access to undifferentiated data increases hallucinations—specificity beats volume.

Golden datasets require human curation—weeks of work, not a connector and a prayer.

What you feed the model is the most important investment—not the model itself.

ACTION BYTE: Before any enterprise agent deployment, allocate 2-4 weeks for dataset curation. Assign a domain expert to tag your "golden 100" examples. This work is invisible but load-bearing.
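
If you want to make the "golden 100" concrete, the selection step can be as simple as the sketch below. It assumes a domain expert has already reviewed and scored each candidate by hand; the `Example` fields, the 1-5 quality scale, and the `select_golden` helper are illustrative assumptions, not something described in the episode.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Example:
    text: str
    reviewed_by_expert: bool  # hypothetical flag set during manual curation
    quality: int              # hypothetical 1-5 score assigned by the reviewer


def select_golden(examples: List[Example], min_quality: int = 4, limit: int = 100) -> List[Example]:
    # Keep only expert-reviewed examples at or above the quality bar.
    kept = [e for e in examples if e.reviewed_by_expert and e.quality >= min_quality]
    # Highest-scored first; list.sort is stable, so ties keep their input order.
    kept.sort(key=lambda e: e.quality, reverse=True)
    return kept[:limit]


candidates = [
    Example("Spec with clear abuse analysis", True, 5),
    Example("Unreviewed wiki page", False, 5),
    Example("Reviewed but vague spec", True, 2),
    Example("Spec with solid privacy review", True, 4),
]
golden = select_golden(candidates)
print([e.text for e in golden])
```

Note that the code is the easy part. The expensive input is the human judgment behind `reviewed_by_expert` and `quality`, which is exactly the 2-4 weeks of curation the ACTION BYTE asks you to budget for.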

Bit 8: The Cultural Adoption Playbook

Rolling out AI tools and expecting adoption is enterprise magical thinking. Tomer Cohen's blunt take: "I see a lot of companies roll out their agents and just expecting companies to adopt. It doesn't work this way." Tools are necessary but not sufficient. If you want behavior change, you need to redesign the system that produces behavior.

LinkedIn's playbook: Change hiring to screen for AI fluency. Change performance reviews to include full-stack builder criteria. Replace the Associate Product Manager (APM) program with an Associate Product Builder (APB) program. Celebrate wins publicly: designers pushing PRs, PMs building dashboards without engineering, a UX researcher who became a growth PM using the new tools. Create visible success stories that lower resistance for the skeptical majority. Permission to experiment isn't enough; you need proof that experimentation pays off.

Bit: Announcements create awareness; incentives create adoption.

Hiring now screens for AI agency and fluency—signals matter at the door.

Performance reviews include cross-functional stretch—what gets measured gets optimized.

Visible wins fuel the flywheel—success stories are adoption infrastructure.

ACTION BYTE: Identify three AI adoption wins from the past quarter and publicize them widely—all-hands, Slack, internal newsletter. Make success visible to lower resistance.

The inspiration for this newsletter came from my favorite newsletter and podcast host, Lenny Rachitsky, and his episode: Why LinkedIn is turning PMs into AI-powered "full stack builders" | Tomer Cohen (LinkedIn CPO)

Until next time, take it one bit at a time!

Rob

P.S. Thanks for making it to the end—because this is where the future reveals itself.