8 bits for a Byte: The mandate for today's C-suite is clear: Lead Like an AI Transformation Architect. That means championing the necessary cultural shifts and operational redesigns, as our first Byte argues, and also understanding the critical technologies that make them possible. That’s why the rest of this issue of AI Quick Bytes takes you inside Anthropic's Model Context Protocol (MCP). We peel back the layers on this foundational element so that you have the clear, confident understanding an architect needs. Join us for a session that bridges strategy with substance, and connect with our partners who are shaping the AI landscape.

In this week’s AI Quick Bytes, you will learn the intricacies of critical enablers like Anthropic's Model Context Protocol, taking a giant leap towards becoming a truly AI-Fluent Executive. You understand that building Resilient Enterprise AI isn't magic—it’s a potent blend of cutting-edge architecture, transformative culture, and visionary leadership.

But what happens when the blueprint meets the boardroom? When elegant protocols meet entrenched processes? If you're driven to bridge the gap between AI theory and enterprise-wide reality, and transform pilot projects into production powerhouses, this is your essential next step.

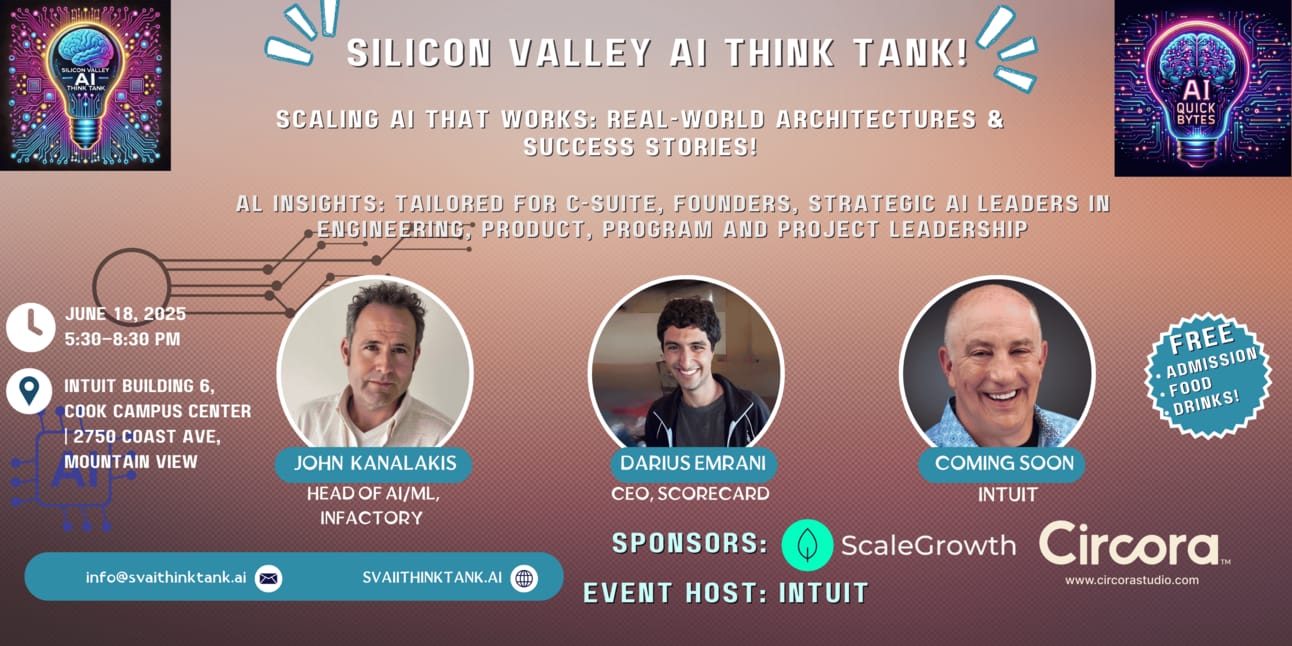

🚀 Unlock AI at Scale

Join us in the heart of Silicon Valley for an exclusive gathering designed to arm you with the connections, strategic insights, and actionable wisdom to lead your AI transformation with confidence. We’re bringing together the trailblazers—from Fortune 500 titans to agile innovators—who aren't just talking about AI, they're deploying it, scaling it, and defining its future.

This Isn't Just Another Meetup. This Is Where AI Strategy Ignites Action. Expect To:

Decode Success: Dive deep into real-world AI architectures that have conquered scale and complexity.

Forge Your Framework: Acquire strategic blueprints that fuse AI capabilities directly with hard business outcomes.

Lead the Transformation: Master the art of aligning technology, culture, and operations for sustained AI momentum.

Connect with the Vanguard: Engage with an elite lineup of speakers and peers actively shaping the AI frontier.

Whether you're architecting AI systems, steering strategic initiatives, or championing the AI-driven culture, this evening is curated for you. It’s a rare opportunity to move beyond the "what" and "why" you've explored this week, and immerse yourself in the "how"—with leaders who've navigated the path.

🌟 The Perks of a Pioneer:

Complimentary Access: Your seat is on us.

Gourmet Networking: Fuel connections with free food and drinks.

Unquantifiable ROI: Gain insights that could redefine your AI trajectory.

This is more than an event; it's a catalyst. Come ready to expand your strategic toolkit, build invaluable alliances, and co-architect the future of intelligent, resilient enterprise systems.

💥 Your Invitation to Lead: RSVP Today.

Spaces are limited for this curated experience. Secure your spot among Silicon Valley's AI leadership now and transform your AI vision into reality.

💡 Brought to you by Silicon Valley AI Think Tank & AI Quick Bytes – Guiding the future of AI to unlock human potential.

Let’s Get To It!

Welcome to 8 bits for a Byte!

Bit 1: AI: More Than Product, It's a Leadership Mandate

A compelling perspective shared within my LinkedIn network offers a crucial reframe: AI's integration isn't simply about the next product cycle; it's a test of leadership and a call for profound organizational redesign.

Executive Summary:

The conversation around AI often gravitates towards its impact on product strategy and design, which is important. Yet, an even more critical perspective suggests that if AI initiatives remain confined to Product, they are destined to fall short. AI, when implemented at scale, is not a mere feature addition; it’s a paradigm shift in value delivery. This shift demands that organizations train their workforce for effective human-AI collaboration, fundamentally rethink workflows, incentives, and decision-making processes, and actively reshape their culture to embrace agility, ethical considerations, and robust judgment.

Such a change is a cultural metamorphosis, not a simple update to the product pipeline. While product and design teams are crucial, they cannot succeed in isolation. They must work in lockstep with executive leadership, operations, human resources, and other key departments. The alternative is the development of intelligent products for an organization unprepared to utilize them. The true promise of AI lies not in the artifacts we produce, but in the evolution of how we serve.

Strategic Implications:

Siloing AI within product or R&D teams will critically limit its transformative potential across the enterprise.

Successful AI adoption requires a strategic allocation of resources towards cultural change and workforce enablement.

The definition of "value" and how it's delivered will need to be re-evaluated through an AI-augmented lens.

Action Byte:

Mandate your executive team to read and discuss a foundational text on AI ethics and organizational change this month, and task them with presenting key learnings and proposed initial actions for your company.

Bit 2: MCP Demystified: The Strategic "Why" for AI Change Agents

With the stage set on AI leadership, it's time to unpack a key technical concept: Anthropic's Model Context Protocol (MCP). If the term MCP sounds like jargon you'd rather avoid, this Byte is for you—we're making it clear why understanding this protocol is a strategic imperative for any Lead AI Change Agent.

Executive Summary:

Anthropic's Model Context Protocol (MCP) offers an open, standardized solution to a pressing challenge: ensuring that sophisticated AI models can reliably and securely draw upon the rich context (both data and tools) required for complex enterprise tasks. Drawing inspiration from proven standards like APIs for web services, MCP establishes a universal language. This allows AI applications to communicate consistently with any external system, whether it's an internal database reached via local transports or a cloud service reached via secure web protocols. It replaces what was previously a complex mesh of custom-built, often fragile integrations, and its evolution is transparent through public updates and contribution channels.

For you as a leader, MCP’s strategic value lies in its power to impose order on the often-turbulent AI development process. Historically, teams frequently duplicated efforts, creating siloed and incompatible methods for providing context to AI models, which impeded progress and heightened risk. MCP paves the way for a more integrated, "plug-and-play" AI ecosystem, supported by practical examples and clear architectural documentation. It enables application developers to create an MCP client once for an application, which can then readily connect to numerous MCP servers (representing various data sources or tools) without further integration overhead, fostering both accelerated development and strategic adaptability in your AI deployments.
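
To make the "build the client once" idea concrete, here is a minimal sketch in plain Python. It does not use the real MCP SDK: `ContextServer`, `CrmServer`, `WikiServer`, `SketchClient`, and `integration_counts` are hypothetical names invented for illustration, and the two servers return canned strings. The point is only the shape: every server honors one shared contract, so the client needs no server-specific code.

```python
from typing import Protocol


class ContextServer(Protocol):
    """Hypothetical shared contract; a stand-in for the protocol, not the MCP SDK."""

    name: str

    def list_tools(self) -> list[str]: ...

    def call_tool(self, tool: str, **kwargs: object) -> str: ...


class CrmServer:
    """Illustrative server fronting a CRM; returns canned strings."""

    name = "crm"

    def list_tools(self) -> list[str]:
        return ["lookup_customer"]

    def call_tool(self, tool: str, **kwargs: object) -> str:
        return f"crm:{tool}:{kwargs.get('customer_id')}"


class WikiServer:
    """Illustrative server fronting an internal wiki; returns canned strings."""

    name = "wiki"

    def list_tools(self) -> list[str]:
        return ["search_pages"]

    def call_tool(self, tool: str, **kwargs: object) -> str:
        return f"wiki:{tool}:{kwargs.get('query')}"


class SketchClient:
    """Written once; works with any object that honors ContextServer."""

    def __init__(self) -> None:
        self._servers: dict[str, ContextServer] = {}

    def connect(self, server: ContextServer) -> None:
        self._servers[server.name] = server

    def catalog(self) -> dict[str, list[str]]:
        return {name: s.list_tools() for name, s in self._servers.items()}

    def call(self, server: str, tool: str, **kwargs: object) -> str:
        return self._servers[server].call_tool(tool, **kwargs)


def integration_counts(apps: int, sources: int) -> tuple[int, int]:
    """Return (point-to-point integrations, shared-protocol implementations)."""
    return apps * sources, apps + sources


client = SketchClient()
client.connect(CrmServer())
client.connect(WikiServer())
print(client.catalog())
print(client.call("crm", "lookup_customer", customer_id=42))
print(integration_counts(5, 8))
```

The last line shows the arithmetic behind the strategic claim: with 5 applications and 8 data sources (illustrative numbers), point-to-point wiring can mean up to 40 custom integrations, while a shared protocol needs 13 implementations, one per application and one per source.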

Strategic Implications:

MCP champions interoperability, significantly reducing vendor lock-in for both LLM technologies and associated tooling by standardizing key integration points.

A clear understanding of MCP allows Lead AI Change Agents to make more informed decisions regarding AI infrastructure investments, prioritizing standards that foster agility and future scalability.

It provides a clearer framework for data governance and security in AI systems by centralizing and standardizing how context is fed to and accessed by models.

Action Byte:

Convene your AI and Machine Learning platform leaders to review your current methods for tool and data integration in LLM projects. Benchmark these against MCP's principles to identify immediate opportunities for standardization, efficiency improvements, and risk reduction.

Bit 3: MCP's Enterprise Edge: Speed, ROI, and the Microservices Model for AI

Imagine a world where your AI teams aren't constantly rebuilding data connections. Anthropic's MCP offers a path there, presenting a compelling 'microservices model' for AI context that can transform how Lead AI Change Agents structure and speed up their enterprise AI initiatives.

Executive Summary:

The enterprise value of Anthropic's Model Context Protocol (MCP) lies in its ability to dismantle internal silos and streamline the path from AI concept to production. For a Lead AI Change Agent, this means achieving greater organizational velocity. MCP enables this by allowing enterprises to treat access to data and tools as managed "services." A team responsible for a specific capability, like a proprietary knowledge base or a complex internal API, can expose it as an MCP server. This one-time effort makes that capability readily and consistently available to any AI application or agent framework within the company that speaks MCP.

This "service-oriented" approach to AI context, much like the microservices architecture transformed traditional software, means AI project teams no longer need to be experts in every underlying data system. They can simply "subscribe" to the MCP servers they need, accelerating their development and allowing them to focus on the unique logic of their AI application. This not only makes individual teams more productive but also makes the entire AI development ecosystem within the enterprise more efficient, resilient, and quicker to adapt—key ingredients for maximizing the return on your AI investments.

Strategic Implications:

MCP helps transform internal data and tools into discoverable, reusable "AI-ready" assets accessible across the enterprise.

It promotes a culture of internal collaboration and shared ownership of AI enablers, breaking down data silos.

By simplifying integration, MCP lowers the barrier for experimenting with new AI models or tools, accelerating the innovation pipeline.

Action Byte:

Initiate a two-pronged approach to unlock MCP's value: First, calculate the average person-hours spent per AI project on custom data/tool integration over the past year. Use this to baseline current costs and project potential ROI from adopting an MCP-like strategy for your top 3 most common integration points, then present this business case to your steering committee. Second, concurrently pilot the creation of MCP servers for 2-3 of these "high-demand" internal tools or data sources, document them in an internal registry, and actively promote their use to foster adoption and measure tangible efficiency gains.
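
The baseline calculation in the first prong can be sketched in a few lines. This is a simplified model under stated assumptions, not a finance-grade template: `IntegrationBaseline` and `projected_roi` are hypothetical names, the fraction of hours saved and the one-time build cost are inputs you would have to estimate yourself, and the figures in the usage lines are placeholders rather than benchmarks.

```python
from dataclasses import dataclass


@dataclass
class IntegrationBaseline:
    """Inputs gathered from the past year's AI projects (all values are yours to supply)."""

    projects_per_year: int
    avg_hours_per_project: float  # person-hours spent on custom data/tool integration
    hourly_cost: float            # fully loaded cost per person-hour

    def annual_cost(self) -> float:
        return self.projects_per_year * self.avg_hours_per_project * self.hourly_cost


def projected_roi(
    baseline: IntegrationBaseline,
    hours_saved_fraction: float,  # assumption: share of integration hours reuse would remove
    one_time_build_cost: float,   # assumption: cost to build and document the shared servers
) -> float:
    """First-year ROI as (savings - investment) / investment."""
    if one_time_build_cost <= 0:
        raise ValueError("one_time_build_cost must be positive")
    savings = baseline.annual_cost() * hours_saved_fraction
    return (savings - one_time_build_cost) / one_time_build_cost


# Placeholder figures for illustration only.
baseline = IntegrationBaseline(projects_per_year=12, avg_hours_per_project=150.0, hourly_cost=100.0)
print(baseline.annual_cost())
print(projected_roi(baseline, hours_saved_fraction=0.5, one_time_build_cost=60_000.0))
```

The pilot in the second prong is what replaces the assumed `hours_saved_fraction` with a measured one.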

Bit 4: MCP's Core Design: Tools, Resources, & Prompts as Levers for Control

Effective AI requires nuanced control over how models interact with your enterprise context. MCP achieves this through three key components: Tools, Resources, and Prompts. For Lead AI Change Agents, these aren't just technical terms, but levers for strategic control and operational flexibility.

Executive Summary:

Anthropic's Model Context Protocol (MCP) provides its nuanced control through a clever division of labor among three core components: Tools, Resources, and Prompts. As a Lead AI Change Agent, recognizing their distinct roles is key to envisioning robust AI integrations. Tools empower the AI model itself to become an actor; the MCP server exposes capabilities (like API calls or database queries), and the LLM autonomously decides when and how to use them to achieve a goal. This is the engine for agent-like behaviors where the AI takes initiative within defined boundaries.

Then there are Resources: these are data payloads—documents, images, dynamic data structures—that the server provides to the client application. The application, not the model directly, controls the lifecycle and presentation of these resources. For instance, an application might fetch a 'resource' (e.g., a customer record) and then decide which parts to show a user or feed to an LLM. Finally, Prompts are user-driven: predefined templates for common tasks (like a /generateReport command) that ensure consistency and leverage established workflows. MCP's genius lies in this careful separation, ensuring that control over AI interactions is distributed appropriately across the model, the application, and the end-user, providing layers of operational safety and precision.
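
The division of control described above can be sketched as follows. This is plain Python rather than the MCP SDK, and every name in it (`SketchServer`, `model_chooses_tool`, `app_selects_fields`, `user_runs_prompt`, the `customer://1001` identifier) is hypothetical. The model's decision is faked with a keyword match, since a real LLM would reason over tool descriptions instead.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class SketchServer:
    """Hypothetical server exposing the three primitive types as plain dicts."""

    tools: dict[str, Callable[[str], str]] = field(default_factory=dict)
    resources: dict[str, dict[str, str]] = field(default_factory=dict)
    prompts: dict[str, str] = field(default_factory=dict)


server = SketchServer()
server.tools["get_order_status"] = lambda order_id: f"Order {order_id}: shipped"
server.resources["customer://1001"] = {"name": "Ada", "tier": "gold", "notes": "internal only"}
server.prompts["generateReport"] = "Write a {period} report for the {team} team."


def model_chooses_tool(goal: str, available: list[str]) -> Optional[str]:
    """Model-controlled. Stand-in for an LLM's decision (a keyword match here)."""
    if "order" in goal.lower() and "get_order_status" in available:
        return "get_order_status"
    return None


def app_selects_fields(resource: dict[str, str], allowed: set[str]) -> dict[str, str]:
    """Application-controlled. The app decides which fields reach the user or the LLM."""
    return {key: value for key, value in resource.items() if key in allowed}


def user_runs_prompt(template: str, **args: str) -> str:
    """User-controlled. The user explicitly invokes a predefined template."""
    return template.format(**args)


# Tool: the (fake) model picks the capability, then the server executes it.
chosen = model_chooses_tool("Where is my order?", list(server.tools))
if chosen is not None:
    print(server.tools[chosen]("A-17"))

# Resource: the application filters the record before anything else sees it.
print(app_selects_fields(server.resources["customer://1001"], {"name", "tier"}))

# Prompt: the user fills in a template, much like a /generateReport command.
print(user_runs_prompt(server.prompts["generateReport"], period="weekly", team="sales"))
```

Notice where each decision lives: the tool choice sits with the model, the field filter sits with the application, and the template invocation sits with the user.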

Strategic Implications:

The "Tools" mechanism enables LLMs to move beyond just text generation to actively performing tasks within your existing enterprise systems.

"Resources" allow for curated and secure exposure of enterprise data to AI applications, with the application layer acting as a crucial intermediary.

Standardized "Prompts" can significantly improve user adoption and efficiency for AI-powered features by simplifying common interactions.

Action Byte:

Review a key AI initiative in your portfolio and identify which types of interactions would best map to MCP's Tools (model-led actions), Resources (application-managed data), or Prompts (user-initiated templates). This clarifies how MCP's structure could bring better control and efficiency.

News you’re not getting—until now.

Join 4M+ professionals who start their day with Morning Brew—the free newsletter that makes business news quick, clear, and actually enjoyable.

Each morning, it breaks down the biggest stories in business, tech, and finance with a touch of wit to keep things smart and interesting.

Bit 5: MCP: The Backbone for Autonomous & Effective Enterprise AI Agents

Understanding MCP's components sets the stage for its most exciting enterprise application: powering the next generation of AI Agents. For Lead AI Change Agents, MCP isn't just about better integrations; it's about building the backbone for truly autonomous and effective AI assistants.

Executive Summary:

The true promise of Anthropic's Model Context Protocol (MCP) for enterprises crystallizes in its capacity to underpin advanced AI Agents. As a Lead AI Change Agent, consider MCP the key to unlocking more autonomous AI. It achieves this by providing the essential infrastructure for the "augmented LLM"—an AI core extended with robust connections to external tools for performing actions, retrieval systems for accessing knowledge, and memory for maintaining context and state. MCP acts as this critical interfacing layer, standardizing how the LLM connects to these vital augmentations.

This standardization means that AI agents built with MCP can be more dynamic and adaptable. They aren't limited to pre-defined functions; instead, they can discover and utilize new tools and data sources exposed via MCP servers as they become available. For your enterprise, this means your agent development teams can concentrate on the agent's core logic, decision-making capabilities, and the overall "agentic loop" (task execution, response analysis, and iteration), while relying on MCP to handle the complex world of external integrations in a consistent and scalable manner.
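
Here is a rough sketch of that agentic loop, showing why re-discovering tools on every iteration matters. It is a toy under heavy assumptions: `ToolRegistry`, `plan_next_step`, and `run_agent` are hypothetical names, the registry is an in-memory dict standing in for connected MCP servers, and the "planner" simply picks the first unused tool where a real agent would ask an LLM.

```python
from typing import Callable, Optional

# Hypothetical stand-in for the set of tools exposed by connected servers.
ToolRegistry = dict[str, Callable[[str], str]]


def plan_next_step(task: str, tools: list[str], history: list[str]) -> Optional[str]:
    """Stand-in for the LLM: pick the first available tool not yet used."""
    used = {entry.split(":", 1)[0] for entry in history}
    for name in tools:
        if name not in used:
            return name
    return None  # nothing left to try, so the loop should stop


def run_agent(task: str, registry: ToolRegistry, max_steps: int = 5) -> list[str]:
    """Agentic loop: discover tools, choose one, execute, record, iterate."""
    history: list[str] = []
    for _ in range(max_steps):
        tools = sorted(registry)  # re-discover on every iteration
        choice = plan_next_step(task, tools, history)
        if choice is None:
            break
        result = registry[choice](task)  # task execution
        history.append(f"{choice}:{result}")  # kept for response analysis
    return history


registry: ToolRegistry = {"search": lambda task: "3 docs"}
print(run_agent("summarize Q3 churn", registry))

# A new capability appears in the ecosystem; the agent code does not change.
registry["summarize"] = lambda task: "ok"
print(run_agent("summarize Q3 churn", registry))
```

The second run picks up the new `summarize` tool without any change to `run_agent`, which is the "not limited to pre-defined functions" property in miniature.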

Strategic Implications:

MCP enables the development of AI agents that can operate more autonomously and effectively within complex enterprise environments.

By standardizing access to tools and data, MCP allows for the creation of more versatile agents that are not tightly coupled to specific backend systems.

This approach supports the strategic goal of creating "self-improving" or "self-expanding" agent capabilities as new MCP servers (tools/data) are added to the ecosystem.

Action Byte:

Identify a high-value, repetitive enterprise workflow that could be significantly improved by an AI agent. Task a team to design this agent based on the "augmented LLM" concept, specifically outlining how MCP would facilitate its connections to necessary tools and data.

Bit 6: Advanced MCP: Architecting Sophisticated & Hierarchical AI Agent Systems

As AI agents tackle more complex enterprise tasks, their underlying architecture must evolve. For Lead AI Change Agents, understanding how MCP's 'Sampling' and 'Composability' features facilitate intricate, even hierarchical, agent systems is key to future-proofing your AI strategy.

Executive Summary:

Lead AI Change Agents looking to build next-generation AI solutions will find critical enablers in two advanced features of Anthropic's MCP: Sampling and Composability. Sampling provides a mechanism for an MCP server (like a specialized tool) to request intelligent processing from the LLM that the client application controls. This means a tool server can, for example, ask the client's LLM to help it understand a complex user query or to format data in a specific way, all while the client retains full oversight of the LLM's use, ensuring consistency and control.

Composability is the principle that any MCP component can be both a client (consuming services) and a server (offering services). This is the architectural key to building sophisticated, multi-agent systems. A user might interact with a primary agent (an MCP server). This agent can then, acting as a client, orchestrate a team of specialized sub-agents (themselves MCP servers for specific tasks like data retrieval or analysis), which in turn might call other MCP tool servers. This creates a powerful, flexible hierarchy where agents can collaborate, much like human teams, to address complex enterprise challenges.
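
Both ideas can be sketched together in a few dozen lines. This is an illustration, not the MCP SDK: `ClientApp`, `ToolServer`, `OrchestratorAgent`, and `fake_llm` are hypothetical names, the "LLM" is a function that lowercases text, and the call budget is one assumed way a client might keep oversight of sampling.

```python
from typing import Callable

Sampler = Callable[[str], str]


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model: returns the text after the colon, lowercased."""
    return prompt.split(":", 1)[1].strip().lower()


class ClientApp:
    """Owns the LLM. Servers may ask it for completions, within a budget it sets."""

    def __init__(self, llm: Sampler, max_sampling_calls: int = 3) -> None:
        self._llm = llm
        self._max = max_sampling_calls
        self.calls = 0

    def handle_sampling_request(self, prompt: str) -> str:
        if self.calls >= self._max:
            raise PermissionError("sampling budget exhausted")
        self.calls += 1
        return self._llm(prompt)


class ToolServer:
    """Specialized server with no LLM of its own; it borrows the client's via sampling."""

    def __init__(self, name: str) -> None:
        self.name = name

    def handle(self, request: str, sampler: Sampler) -> str:
        normalized = sampler(f"Normalize: {request}")
        return f"{self.name} handled '{normalized}'"


class OrchestratorAgent:
    """Composability: a server to the user-facing client, a client to its sub-agents."""

    def __init__(self, sub_servers: list[ToolServer]) -> None:
        self._sub_servers = sub_servers

    def handle(self, request: str, sampler: Sampler) -> list[str]:
        # Sampling requests pass back up the chain to the one client that owns the LLM.
        return [server.handle(request, sampler) for server in self._sub_servers]


app = ClientApp(fake_llm, max_sampling_calls=2)
orchestrator = OrchestratorAgent([ToolServer("retrieval"), ToolServer("analysis")])
print(orchestrator.handle("  Q3 Revenue ", app.handle_sampling_request))
print(app.calls)
```

Neither sub-agent hosts a model, yet both get intelligent preprocessing, and the client can cut them off the moment the budget is spent.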

Strategic Implications:

Sampling allows for more intelligent and interactive MCP servers without the need for each server to host its own LLM, promoting efficient resource use.

Composability supports the design of modular and scalable AI agent architectures, allowing for specialization and easier maintenance of agent capabilities.

Together, these features pave the way for "AI ecosystems" within the enterprise, where various agents and services interact in a standardized, controllable manner.

Action Byte:

Commission a design sprint focused on a complex, multi-stage enterprise problem. Task the team with creating a conceptual model of how a "team" of hierarchically organized, MCP-enabled AI agents, leveraging sampling and composability, could automate or significantly augment this process.

Bit 7: Operationalizing MCP: Remote Servers & Secure Authentication

Sophisticated AI agents and services are powerful, but how do we deploy and access them securely and scalably across the enterprise? For Lead AI Change Agents, MCP's support for Remote Servers and OAuth 2.0 authentication is the answer to operationalizing your AI vision.

Executive Summary:

To truly operationalize an AI vision built on interconnected services and agents, Lead AI Change Agents require robust deployment and security mechanisms. Anthropic's MCP is directly addressing this with its support for Remote Servers and industry-standard OAuth 2.0 authentication. The shift towards Remote Servers means MCP-enabled capabilities are no longer confined to local machine setups; they can be hosted on dedicated infrastructure and accessed via URLs, using technologies like Server-Sent Events (SSE). This vastly improves discoverability and ease of use, removing significant hurdles for developers and end-users alike.

Complementing remote access, MCP's adoption of OAuth 2.0 ensures these connections are secure. When an AI application (MCP client) connects to a remote MCP server that fronts an enterprise service (e.g., a CRM), the MCP server handles the OAuth 2.0 authentication flow with that end service. The MCP server securely manages the service's access token, providing the client application with a separate session token. This model not only simplifies secure access for the client but also centralizes critical authentication logic at the MCP server level, aligning with enterprise security best practices and making it easier to deploy a wider range of AI-powered services.
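
The token separation described above can be sketched like this. To be clear about what it is not: this does not implement OAuth 2.0. The code-for-token exchange and the upstream service call are injected stubs, and `McpServerAuth`, `complete_login`, `call_upstream`, and `revoke` are hypothetical names. The sketch shows only the bookkeeping: the server keeps the service's access token and hands the client an unrelated session token.

```python
import secrets
from typing import Callable


class McpServerAuth:
    """Holds upstream access tokens server-side; clients only ever see session tokens."""

    def __init__(
        self,
        exchange_code: Callable[[str], str],      # stub for the OAuth code-for-token exchange
        call_service: Callable[[str, str], str],  # stub for calling the end service (e.g. a CRM)
    ) -> None:
        self._exchange_code = exchange_code
        self._call_service = call_service
        self._upstream_tokens: dict[str, str] = {}  # session token -> upstream access token

    def complete_login(self, authorization_code: str) -> str:
        """Finish the flow with the end service and return a separate session token."""
        upstream_token = self._exchange_code(authorization_code)
        session_token = secrets.token_urlsafe(32)
        self._upstream_tokens[session_token] = upstream_token
        return session_token

    def call_upstream(self, session_token: str, request: str) -> str:
        """Swap the client's session token for the stored upstream token, then call out."""
        upstream_token = self._upstream_tokens.get(session_token)
        if upstream_token is None:
            raise PermissionError("unknown or revoked session")
        return self._call_service(upstream_token, request)

    def revoke(self, session_token: str) -> None:
        self._upstream_tokens.pop(session_token, None)


auth = McpServerAuth(
    exchange_code=lambda code: f"crm-token-for-{code}",
    call_service=lambda token, request: f"{request}: ok",
)
session = auth.complete_login("abc")
print(auth.call_upstream(session, "get_account"))
```

Because the upstream token never leaves the server, revoking a session is a single server-side operation, which is what makes the centralized model attractive to security teams.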

Strategic Implications:

Remote server capability significantly enhances the scalability and manageability of your enterprise's MCP service landscape.

OAuth 2.0 provides a familiar and trusted authentication framework, making it easier to integrate MCP services with existing enterprise security policies.

The combination reduces developer friction, enabling faster creation and adoption of secure, remotely accessible AI tools and data sources.

Action Byte:

Task your AI infrastructure team to define a reference architecture for deploying and managing Remote MCP servers within your enterprise, including guidelines for OAuth 2.0 integration with key internal and external services.

Bit 8: The Future of MCP: A Discoverable, Trusted Ecosystem for Evolving AI

To unlock MCP's full potential for interconnected AI, robust mechanisms for service discovery and trust are essential. The planned MCP Registry and 'Well-Known URLs' are precisely these, offering Lead AI Change Agents a glimpse into a future of more autonomous and adaptable AI systems.

Executive Summary:

As enterprises embrace an ecosystem of MCP-enabled services and agents, two upcoming features promise to revolutionize how these components are discovered, trusted, and utilized: the MCP Registry and Well-Known URLs. The MCP Registry will serve as a centralized, official metadata service where organizations and developers can publish and find MCP servers. This addresses the current fragmentation, providing a trusted source to identify a server's capabilities, its protocol (e.g., local or remote), its builder, and critically, its verification status (e.g., an official server blessed by a vendor like Shopify). This registry will also handle versioning, ensuring clarity as servers evolve.

Complementing this, Well-Known URLs (e.g., yourcompany.com/.well-known/mcp.json) offer a direct, top-down discovery path. An AI agent wanting to interact with a specific domain can check this standardized URL to find its official MCP endpoint and capabilities. Together, these mechanisms are foundational for enabling "self-evolving agents"—AI assistants that can dynamically discover, verify, and integrate new tools and data sources from the registry or known URLs on the fly, dramatically expanding their autonomy and adaptability without needing to be pre-programmed with every possible capability.
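
As a sketch of what top-down discovery could look like, the snippet below builds the well-known URL for a domain and validates a discovery document. Treat it as speculative: the feature is described above as planned, the source does not define the file's contents, and the `endpoint` and `capabilities` fields (like the `well_known_url` and `parse_manifest` helpers) are assumptions made purely for illustration. No network call is made; the document is an inline string.

```python
import json
from urllib.parse import urlsplit, urlunsplit

WELL_KNOWN_PATH = "/.well-known/mcp.json"


def well_known_url(domain: str) -> str:
    """Build the standardized discovery URL for a domain."""
    return urlunsplit(("https", domain, WELL_KNOWN_PATH, "", ""))


def parse_manifest(raw: str) -> dict:
    """Validate a discovery document. The field names are assumed, not specified."""
    doc = json.loads(raw)
    endpoint = doc["endpoint"]
    if urlsplit(endpoint).scheme != "https":
        raise ValueError("endpoint must use https")
    return {"endpoint": endpoint, "capabilities": list(doc.get("capabilities", []))}


# Hypothetical document an agent might retrieve from the URL above.
sample = '{"endpoint": "https://mcp.yourcompany.com/sse", "capabilities": ["tools", "resources"]}'
print(well_known_url("yourcompany.com"))
print(parse_manifest(sample))
```

Rejecting non-HTTPS endpoints is one small example of the "verify" step; a production agent would presumably also check the registry's verification status before trusting a newly discovered server.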

Strategic Implications:

The MCP Registry will significantly reduce friction in discovering and integrating trusted MCP servers, accelerating AI development.

Well-Known URLs provide a standardized way for enterprises to advertise their official AI-accessible MCP endpoints, enhancing interoperability.

These features collectively pave the way for AI agents that can autonomously expand their skill sets by discovering new tools and data.

Action Byte:

Begin planning for an internal MCP Registry or a process to vet and list trusted external MCP servers. Simultaneously, task your web architecture team to explore implementing a ".well-known/mcp.json" endpoint for your primary enterprise domain.

This Week's Byte: The Architect's View

This edition of AI Quick Bytes has been about empowering you to Lead Like an AI Transformation Architect. We began by underscoring a fundamental truth: successful AI adoption is far more than a product or technology push; it’s a profound leadership challenge demanding deep cultural and operational shifts across your entire enterprise. This holistic view is paramount.

But true architectural leadership also requires a confident grasp of the critical enablers that make transformation possible. That’s why we dedicated the majority of this issue to demystifying Anthropic's Model Context Protocol (MCP). From its core purpose of standardizing AI’s access to context, to its enterprise value in fostering agility and ROI, its specific components (Tools, Resources, and Prompts), its foundational role in powering sophisticated AI agents through capabilities like Sampling and Composability, its practical deployment via Remote Servers and robust authentication, and finally, its future as a discoverable, trusted ecosystem—understanding MCP is no longer optional. For the Lead AI Change Agent, mastering such foundational protocols is key to not just navigating the AI era, but actively shaping it with strategic foresight and operational excellence. The "why now" is clear: the future of intelligent enterprises is being built on these very principles of integration, standardization, and controlled autonomy.

We're seeing the blueprints for truly integrated AI emerge, demanding that we, as leaders, be both visionary and grounded, equally comfortable discussing cultural transformation and foundational protocols. The question I'm pondering this week is: which "critical enabler" within your own organization, if unlocked by a new standard or approach, could create the most significant cascade of innovation?

Until next time, take it one bit at a time!

Rob

P.S.

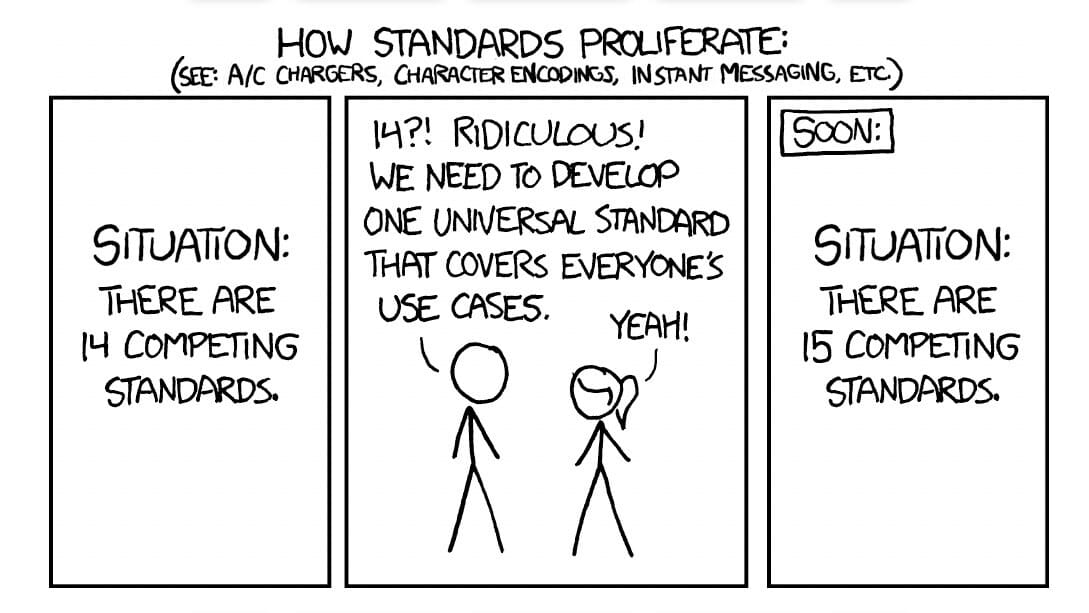

I can’t go three newsletters without a Sunday Funny!
